New in ASP.NET Core 3: Service provider validation

In this post I describe the new "validate on build" feature that has been added to ASP.NET Core 3.0. This can be used to detect when your DI service provider is misconfigured. Specifically, the feature detects where you have a dependency on a service that you haven't registered in the DI container.

I'll start by showing how the feature works, and then show some situations where you can have a misconfigured DI container that the feature won't identify as faulty.

It's worth pointing out that validating your DI configuration is not a new idea: this was a feature of StructureMap I used regularly, and its spiritual successor, Lamar, has a similar feature.

The sample app

For this post, I'm going to use an app based on the default dotnet new webapi template. This consists of a single controller, the WeatherForecastController, that returns a randomly generated forecast based on some static data.

To exercise the DI container a little, I'm going to extract a couple of services. First, the controller is refactored to:

```csharp
[ApiController]
[Route("[controller]")]
public class WeatherForecastController : ControllerBase
{
    private readonly WeatherForecastService _service;

    public WeatherForecastController(WeatherForecastService service)
    {
        _service = service;
    }

    [HttpGet]
    public IEnumerable<WeatherForecast> Get()
    {
        return _service.GetForecasts();
    }
}
```

So the controller depends on the WeatherForecastService. This is shown below (I've elided the actual implementation as it's not important for this post):

```csharp
public class WeatherForecastService
{
    private readonly DataService _dataService;

    public WeatherForecastService(DataService dataService)
    {
        _dataService = dataService;
    }

    public IEnumerable<WeatherForecast> GetForecasts()
    {
        var data = _dataService.GetData();
        // Forecast generation elided
        return new List<WeatherForecast>();
    }
}
```

This service depends on another, the DataService, shown below:

```csharp
public class DataService
{
    public string[] GetData() => new[]
    {
        "Freezing", "Bracing", "Chilly", "Cool", "Mild", "Warm", "Balmy", "Hot", "Sweltering", "Scorching"
    };
}
```

That's all of the services we need, so all that remains is to register them in the DI container in Startup.ConfigureServices:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers();
    services.AddSingleton<WeatherForecastService>();
    services.AddSingleton<DataService>();
}
```

I've registered them as singletons for this example, but that's not important for this feature. With everything set up correctly, sending a request to /WeatherForecast returns a forecast:

```json
[{
    "date":"2019-09-07T22:29:31.5545422+00:00",
    "temperatureC":31,
    "temperatureF":87,
    "summary":"Sweltering"
}]
```

Everything looks good here, so let's see what happens if we mess up the DI registration.

Detecting unregistered dependencies on startup

Let's mess things up a bit, and "forget" to register the DataService dependency in the DI container:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers();
    services.AddSingleton<WeatherForecastService>();
}
```

If we run the app again with dotnet run, we get an exception, a giant stack trace, and the app fails to start. I've truncated and formatted the result below:

```
Unhandled exception. System.AggregateException: Some services are not able to be constructed
(Error while validating the service descriptor
'ServiceType: TestApp.WeatherForecastService Lifetime: Singleton ImplementationType:
TestApp.WeatherForecastService': Unable to resolve service for type
'TestApp.DataService' while attempting to activate 'TestApp.WeatherForecastService'.)
```

This error makes it clear what the problem is: "Unable to resolve service for type 'TestApp.DataService' while attempting to activate 'TestApp.WeatherForecastService'". This is the DI validation feature doing its job! It should help reduce the number of DI errors you discover during normal operation of your app, by throwing as soon as possible on app startup. It's not as useful as an error at compile-time, but that's the price of the flexibility a DI container provides.
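The same check can be reproduced outside ASP.NET Core, which makes it easy to experiment with. The sketch below assumes a console project that references the Microsoft.Extensions.DependencyInjection package (3.0 or later). The service classes are trimmed stand-ins for the ones above, and `ValidationDemo.Validate` is a hypothetical helper. Passing a `ServiceProviderOptions` with `ValidateOnBuild = true` to `BuildServiceProvider` makes building the provider throw an `AggregateException`, with one inner exception per registration that can't be constructed:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Trimmed stand-ins for the services in this post.
public class DataService
{
    public string[] GetData() => new[] { "Freezing", "Mild", "Scorching" };
}

public class WeatherForecastService
{
    private readonly DataService _dataService;

    public WeatherForecastService(DataService dataService) => _dataService = dataService;

    public int CountSummaries() => _dataService.GetData().Length;
}

public static class ValidationDemo
{
    // Hypothetical helper: returns how many registrations failed validation (0 if the provider built).
    public static int Validate(bool registerDataService)
    {
        var services = new ServiceCollection();
        services.AddSingleton<WeatherForecastService>();
        if (registerDataService)
        {
            services.AddSingleton<DataService>();
        }

        try
        {
            // ValidateOnBuild checks the registrations while the provider is being built.
            var options = new ServiceProviderOptions { ValidateOnBuild = true };
            using (services.BuildServiceProvider(options))
            {
                return 0;
            }
        }
        catch (AggregateException ex)
        {
            foreach (Exception inner in ex.InnerExceptions)
            {
                Console.WriteLine(inner.Message);
            }
            return ex.InnerExceptions.Count;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(ValidationDemo.Validate(registerDataService: true));  // 0
        Console.WriteLine(ValidationDemo.Validate(registerDataService: false)); // 1 (after the error message)
    }
}
```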

What if we forget to register the WeatherForecastService instead?

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers();
    services.AddSingleton<DataService>();
}
```

In this case the app starts up fine, and we don't get any error until we hit the API, at which point it blows up!

Oh dear, time for the gotchas…

1. Controller constructor dependencies aren't checked

The reason the validation feature doesn't catch this problem is that controllers aren't created using the DI container. As I described in a previous post, the DefaultControllerActivator sources a controller's dependencies from the DI container, but not the controller itself. Consequently, the DI container doesn't know anything about the controllers, and so can't check that their dependencies are registered.

Luckily, there's a way around this. You can change the controller activator so that controllers are added to the DI container by using the AddControllersAsServices() method on IMvcBuilder:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers()
        .AddControllersAsServices();
    services.AddSingleton<DataService>();
}
```

This enables the ServiceBasedControllerActivator (see my previous post for a detailed explanation) and registers the controllers in the DI container as services. If we run the app now, the validation detects the missing controller dependency on app startup, and throws an exception:
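Both halves of this gotcha can be reproduced in a plain console app. This is a sketch under a few assumptions: it references the Microsoft.Extensions.DependencyInjection package (3.0+), `FakeController` is a hypothetical stand-in for `WeatherForecastController`, and `ActivatorUtilities.CreateInstance` approximates the default activator by filling constructor parameters from the container without the controller itself being registered. Registering the controller as a transient service, which is roughly what `AddControllersAsServices()` does (hence `Lifetime: Transient` in the error), brings it within reach of the validator:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

public class DataService { }

public class WeatherForecastService
{
    public WeatherForecastService(DataService dataService) { }
}

// Hypothetical stand-in for WeatherForecastController.
public class FakeController
{
    public FakeController(WeatherForecastService service) { }
}

public static class ControllerActivationDemo
{
    // Returns where the missing WeatherForecastService registration is detected.
    public static string Run(bool controllersAsServices)
    {
        var services = new ServiceCollection();
        services.AddSingleton<DataService>(); // WeatherForecastService "forgotten"
        if (controllersAsServices)
        {
            // Approximates AddControllersAsServices(): the controller becomes a transient service.
            services.AddTransient<FakeController>();
        }

        ServiceProvider provider;
        try
        {
            provider = services.BuildServiceProvider(new ServiceProviderOptions { ValidateOnBuild = true });
        }
        catch (AggregateException)
        {
            return "startup";
        }

        using (provider)
        {
            try
            {
                // Like the default activator: the arguments come from the container, the controller does not.
                ActivatorUtilities.CreateInstance<FakeController>(provider);
                return "none";
            }
            catch (InvalidOperationException)
            {
                return "request";
            }
        }
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(ControllerActivationDemo.Run(controllersAsServices: false)); // request
        Console.WriteLine(ControllerActivationDemo.Run(controllersAsServices: true));  // startup
    }
}
```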

```
Unhandled exception. System.AggregateException: Some services are not able to be constructed
(Error while validating the service descriptor
'ServiceType: TestApp.Controllers.WeatherForecastController Lifetime: Transient
ImplementationType: TestApp.Controllers.WeatherForecastController': Unable to
resolve service for type 'TestApp.WeatherForecastService' while attempting to
activate 'TestApp.Controllers.WeatherForecastController'.)
```

This seems like a handy solution. I'm not entirely sure what the trade-offs are, but it should be fine (it's a supported scenario, after all).

We're not out of the woods yet though, as constructor injection isn't the only way to inject dependencies into controllers…

2. [FromServices] injected dependencies aren't checked

Model binding is used in MVC actions to control how an action method's parameters are created, based on the incoming request, using attributes such as [FromBody] and [FromQuery].

In a similar vein, the [FromServices] attribute can be applied to action method parameters, and those parameters will be created by sourcing them from the DI container. This can be useful if you have a dependency which is only required by a single action method. Instead of injecting the service into the constructor (and therefore creating it for every action on that controller) you can inject it into the specific action instead.

For example, we could rewrite the WeatherForecastController to use [FromServices] injection as follows:

```csharp
[ApiController]
[Route("[controller]")]
public class WeatherForecastController : ControllerBase
{
    [HttpGet]
    public IEnumerable<WeatherForecast> Get(
        [FromServices] WeatherForecastService service)
    {
        return service.GetForecasts();
    }
}
```

There's obviously no reason to do that here, but it makes the point. Unfortunately, the DI validation won't be able to detect this use of an unregistered service. The app will start just fine, but will throw an exception when you attempt to call the action.
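The reason is that a `[FromServices]` parameter is resolved when the action runs, not when the provider is built. The following is a simplified, hypothetical imitation of that binding (it is not MVC's actual model binder), assuming the Microsoft.Extensions.DependencyInjection package: `InvokeAction` resolves each action parameter from the container at call time. Registering the controller itself doesn't help either, because an action parameter is not a constructor dependency:

```csharp
using System;
using System.Linq;
using System.Reflection;
using Microsoft.Extensions.DependencyInjection;

public class DataService { }

public class WeatherForecastService
{
    public WeatherForecastService(DataService dataService) { }
    public string[] GetForecasts() => new[] { "Mild" };
}

// Hypothetical controller whose only dependency is an action parameter, as with [FromServices].
public class FakeController
{
    public string[] Get(WeatherForecastService service) => service.GetForecasts();
}

public static class FromServicesDemo
{
    // Hypothetical "binder": resolves each parameter of the action from the container per call.
    public static object InvokeAction(IServiceProvider provider, object controller, string actionName)
    {
        MethodInfo action = controller.GetType().GetMethod(actionName);
        object[] args = action.GetParameters()
            .Select(p => provider.GetRequiredService(p.ParameterType))
            .ToArray();
        return action.Invoke(controller, args);
    }

    // Returns true if validation passed but the "request" failed.
    public static bool FailsOnlyAtRequestTime()
    {
        var services = new ServiceCollection();
        services.AddSingleton<DataService>();    // WeatherForecastService "forgotten"
        services.AddTransient<FakeController>(); // validated, but it has no constructor dependencies

        using (var provider = services.BuildServiceProvider(new ServiceProviderOptions { ValidateOnBuild = true }))
        {
            var controller = provider.GetRequiredService<FakeController>();
            try
            {
                InvokeAction(provider, controller, nameof(FakeController.Get));
                return false;
            }
            catch (InvalidOperationException ex)
            {
                Console.WriteLine(ex.Message); // no service registered for WeatherForecastService
                return true;
            }
        }
    }
}

public static class Program
{
    public static void Main() => Console.WriteLine(FromServicesDemo.FailsOnlyAtRequestTime());
}
```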

The obvious solution to this one is to avoid the [FromServices] attribute where possible, which shouldn't be difficult to achieve, as you can always inject into the constructor if need be.

There's one more way to source services from the DI container - using service location.

3. Services sourced directly from IServiceProvider aren't checked

Let's rewrite the WeatherForecastController one more time. Instead of directly injecting the WeatherForecastService, we'll inject an IServiceProvider, and use the service location anti-pattern to retrieve the dependency.

```csharp
using Microsoft.Extensions.DependencyInjection; // GetRequiredService<T>() extension method

[ApiController]
[Route("[controller]")]
public class WeatherForecastController : ControllerBase
{
    private readonly WeatherForecastService _service;

    public WeatherForecastController(IServiceProvider provider)
    {
        // Service location: this dependency is invisible to the DI validator
        _service = provider.GetRequiredService<WeatherForecastService>();
    }

    [HttpGet]
    public IEnumerable<WeatherForecast> Get()
    {
        return _service.GetForecasts();
    }
}
```

Code like this, where you're injecting the IServiceProvider, is generally a bad idea. Instead of being explicit about its dependencies, this controller has an implicit dependency on WeatherForecastService. As well as being harder for developers to reason about, it also means the DI validator doesn't know about the dependency. Consequently, this app will start up fine, and throw on first use.
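A minimal reproduction, assuming the Microsoft.Extensions.DependencyInjection package and a hypothetical `ServiceLocatorController` stand-in registered as a transient (roughly as `AddControllersAsServices()` would register it). The only constructor parameter the validator can see is `IServiceProvider`, which the container always supplies, so the build succeeds and the failure moves to the first resolution:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

public class DataService { }

public class WeatherForecastService
{
    public WeatherForecastService(DataService dataService) { }
}

// Hypothetical stand-in for the controller above: it asks the container for its dependency.
public class ServiceLocatorController
{
    private readonly WeatherForecastService _service;

    public ServiceLocatorController(IServiceProvider provider)
    {
        _service = provider.GetRequiredService<WeatherForecastService>();
    }
}

public static class ServiceLocatorDemo
{
    // Returns true if the provider built but resolving the controller failed.
    public static bool ResolveFails()
    {
        var services = new ServiceCollection();
        services.AddSingleton<DataService>();              // WeatherForecastService "forgotten"
        services.AddTransient<ServiceLocatorController>(); // roughly what AddControllersAsServices() does

        // Builds without error: IServiceProvider is always resolvable, so the constructor looks fine.
        using (var provider = services.BuildServiceProvider(new ServiceProviderOptions { ValidateOnBuild = true }))
        {
            try
            {
                provider.GetRequiredService<ServiceLocatorController>();
                return false;
            }
            catch (InvalidOperationException ex)
            {
                Console.WriteLine(ex.Message);
                return true;
            }
        }
    }
}

public static class Program
{
    public static void Main() => Console.WriteLine(ServiceLocatorDemo.ResolveFails());
}
```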

Unfortunately, you can't always avoid leveraging IServiceProvider. One case is where you have a singleton object that needs scoped dependencies, as I've described previously. Another is where you have a singleton object that can't have constructor dependencies, like validation attributes (as I've also described previously). There's no way around those situations, so you just have to be aware that the guard rails are off.
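For the scoped-dependency case, a common shape is a singleton that takes `IServiceScopeFactory` and creates a scope on demand. A sketch assuming the Microsoft.Extensions.DependencyInjection package; `ReportGenerator` and `ScopedRepository` are hypothetical names. Even with both `ValidateOnBuild` and `ValidateScopes` enabled, forgetting to register the scoped service is only reported when the singleton first does its work:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical scoped service (think of something like a database context).
public class ScopedRepository
{
    public int CountItems() => 42;
}

// Hypothetical singleton that can't take ScopedRepository in its constructor,
// so it creates a scope and resolves the service itself.
public class ReportGenerator
{
    private readonly IServiceScopeFactory _scopeFactory;

    public ReportGenerator(IServiceScopeFactory scopeFactory) => _scopeFactory = scopeFactory;

    public int Generate()
    {
        using (IServiceScope scope = _scopeFactory.CreateScope())
        {
            // Hidden dependency: the validator never sees this lookup.
            return scope.ServiceProvider.GetRequiredService<ScopedRepository>().CountItems();
        }
    }
}

public static class ScopeFactoryDemo
{
    // Returns true if the provider built but Generate() failed.
    public static bool GenerateFails()
    {
        var services = new ServiceCollection();
        services.AddSingleton<ReportGenerator>(); // AddScoped<ScopedRepository>() "forgotten"

        var options = new ServiceProviderOptions { ValidateOnBuild = true, ValidateScopes = true };
        using (var provider = services.BuildServiceProvider(options))
        {
            var generator = provider.GetRequiredService<ReportGenerator>();
            try
            {
                generator.Generate();
                return false;
            }
            catch (InvalidOperationException ex)
            {
                Console.WriteLine(ex.Message);
                return true;
            }
        }
    }
}

public static class Program
{
    public static void Main() => Console.WriteLine(ScopeFactoryDemo.GenerateFails());
}
```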

A similar gotcha that's not immediately obvious is when you're using a factory function to create your dependencies.

4. Services registered using factory functions aren't checked

Let's go back to our original controller, injecting WeatherForecastService into the constructor, and registering the controllers with the DI container using AddControllersAsServices(). But we'll make two changes:

- Forget to register the DataService.
- Use a factory function to create WeatherForecastService.

When I say a factory function, I mean a lambda that's provided at service registration time, that describes how to create the service. For example:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers()
        .AddControllersAsServices();

    services.AddSingleton<WeatherForecastService>(provider =>
    {
        var dataService = new DataService();
        return new WeatherForecastService(dataService);
    });
}
```

In the above example, we provided a lambda for the WeatherForecastService that describes how to create the service. Inside the lambda we manually construct the DataService and WeatherForecastService.

This won't cause any problems in our app, as we are able to resolve the WeatherForecastService from the DI container using the above factory method. We never have to resolve the DataService directly from the DI container. We only need it in the WeatherForecastService, and we're manually constructing it, so there are no problems.

The difficulties arise if we use the IServiceProvider passed into the factory function:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers()
        .AddControllersAsServices();

    services.AddSingleton<WeatherForecastService>(provider =>
    {
        var dataService = provider.GetRequiredService<DataService>();
        return new WeatherForecastService(dataService);
    });
}
```

As far as the DI validation is concerned, this factory function is exactly the same as the previous one, but actually there's a problem. We're using the IServiceProvider to resolve the DataService at runtime using the service locator pattern, so we have an implicit dependency. This is essentially the same as gotcha 3: the service provider validator can't detect cases where services are obtained directly from the service provider.
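The difference between the two factories only shows up at resolution time. A sketch assuming the Microsoft.Extensions.DependencyInjection package, with trimmed stand-ins for the services above and `DataService` deliberately left unregistered. Both registrations pass `ValidateOnBuild`, because the validator never runs the factory, but only the variant that calls back into the `IServiceProvider` fails when the service is first requested:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Trimmed stand-ins for the services in this post.
public class DataService { }

public class WeatherForecastService
{
    public WeatherForecastService(DataService dataService) { }
}

public static class FactoryDemo
{
    // Returns true if the provider built but resolving WeatherForecastService failed.
    public static bool ResolveFails(bool useServiceLocatorInFactory)
    {
        var services = new ServiceCollection(); // DataService deliberately not registered

        if (useServiceLocatorInFactory)
        {
            // Implicit dependency: DataService is only requested when the factory runs.
            services.AddSingleton<WeatherForecastService>(sp =>
                new WeatherForecastService(sp.GetRequiredService<DataService>()));
        }
        else
        {
            // No container lookups: DataService is constructed by hand.
            services.AddSingleton<WeatherForecastService>(sp =>
                new WeatherForecastService(new DataService()));
        }

        // Both variants build: the validator does not execute factory functions.
        using (var provider = services.BuildServiceProvider(new ServiceProviderOptions { ValidateOnBuild = true }))
        {
            try
            {
                provider.GetRequiredService<WeatherForecastService>();
                return false;
            }
            catch (InvalidOperationException ex)
            {
                Console.WriteLine(ex.Message);
                return true;
            }
        }
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(FactoryDemo.ResolveFails(useServiceLocatorInFactory: false)); // False
        Console.WriteLine(FactoryDemo.ResolveFails(useServiceLocatorInFactory: true));  // True
    }
}
```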

As with the previous gotcha, code like this is sometimes necessary, and there's no easy way to work around it. If that's the case, just be extra careful that the dependencies you request are definitely registered correctly.

An idea I toyed with is registering a "dummy" class in development only, which takes all of these "hidden" classes as constructor dependencies. That may help catch registration issues using the service provider validator, but it's probably more effort, and more error-prone, than it's worth.
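For illustration, that idea might look something like the sketch below, assuming the Microsoft.Extensions.DependencyInjection package. `HiddenDependencyCheck` is a hypothetical name, and its constructor would have to be kept in sync by hand with every service you only ever resolve through `IServiceProvider`, which is exactly where the effort and the risk of mistakes come from:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Trimmed stand-ins for the services in this post.
public class DataService { }

public class WeatherForecastService
{
    public WeatherForecastService(DataService dataService) { }
}

// Hypothetical dev-only class: its constructor lists the services that are otherwise
// only resolved via IServiceProvider, so ValidateOnBuild gets a chance to check them.
public class HiddenDependencyCheck
{
    public HiddenDependencyCheck(DataService dataService) { }
}

public static class DummyClassDemo
{
    // Returns true if ValidateOnBuild rejected the configuration.
    public static bool ValidationFails(bool isDevelopment)
    {
        var services = new ServiceCollection(); // DataService deliberately not registered
        services.AddSingleton<WeatherForecastService>(sp =>
            new WeatherForecastService(sp.GetRequiredService<DataService>()));

        if (isDevelopment)
        {
            // Never resolved; it exists only so the validator sees the hidden dependencies.
            services.AddTransient<HiddenDependencyCheck>();
        }

        try
        {
            services.BuildServiceProvider(new ServiceProviderOptions { ValidateOnBuild = true }).Dispose();
            return false;
        }
        catch (AggregateException ex)
        {
            Console.WriteLine(ex.InnerExceptions[0].Message);
            return true;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(DummyClassDemo.ValidationFails(isDevelopment: true));  // True
        Console.WriteLine(DummyClassDemo.ValidationFails(isDevelopment: false)); // False
    }
}
```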

5. Open generic types aren't checked

The final gotcha is called out in the ASP.NET Core source code itself: ValidateOnBuild does not validate open generic types.

As an example, imagine we have a generic ForecastService<T> that can generate multiple types of forecast, T.

```csharp
public class ForecastService<T> where T : new()
{
    private readonly DataService _dataService;

    public ForecastService(DataService dataService)
    {
        _dataService = dataService;
    }

    public IEnumerable<T> GetForecasts()
    {
        var data = _dataService.GetData();
        return new List<T>();
    }
}
```

In Startup.cs we register the open generic, but again forget to register the DataService:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers()
        .AddControllersAsServices();

    services.AddSingleton(typeof(ForecastService<>));
}
```

The service provider validation completely skips over the open generic registration, so it never detects the missing DataService dependency. The app starts up without errors, and will throw a runtime exception if you try to request a ForecastService<T>.

However, if you take a closed version of this dependency in your app anywhere (which is probably quite likely), the validation will detect the problem. For example, we can update the WeatherForecastController to use the generic service, by closing the generic with T as WeatherForecast:

```csharp
[ApiController]
[Route("[controller]")]
public class WeatherForecastController : ControllerBase
{
    private readonly ForecastService<WeatherForecast> _service;

    public WeatherForecastController(ForecastService<WeatherForecast> service)
    {
        _service = service;
    }

    [HttpGet]
    public IEnumerable<WeatherForecast> Get()
    {
        return _service.GetForecasts();
    }
}
```

The service provider validation does detect this! So in reality, the lack of open generic validation is probably not going to be as big a deal as the service locator and factory function gotchas. You always need to close a generic to inject it into a service (unless that service itself is an open generic), so validation should pick up many cases. The exception to this is if you're sourcing open generics using the service locator IServiceProvider, but then you're really back to gotchas 3 and 4 anyway!
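Both behaviours can be seen side by side in a small sketch, assuming the Microsoft.Extensions.DependencyInjection package, with `DataService` left unregistered and a hypothetical `FakeController` standing in for the controller above. The open generic registration on its own builds without complaint; adding a transient consumer of `ForecastService<WeatherForecast>` makes validation build the closed type and spot the missing dependency:

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.DependencyInjection;

public class DataService
{
    public string[] GetData() => new[] { "Mild" };
}

public class WeatherForecast { }

public class ForecastService<T> where T : new()
{
    private readonly DataService _dataService;

    public ForecastService(DataService dataService) => _dataService = dataService;

    public IEnumerable<T> GetForecasts()
    {
        var data = _dataService.GetData();
        return new List<T>();
    }
}

// Hypothetical consumer that closes the generic, like WeatherForecastController.
public class FakeController
{
    public FakeController(ForecastService<WeatherForecast> service) { }
}

public static class OpenGenericDemo
{
    // Returns true if ValidateOnBuild rejected the configuration.
    public static bool ValidationFails(bool registerClosedConsumer)
    {
        var services = new ServiceCollection(); // DataService deliberately not registered
        services.AddSingleton(typeof(ForecastService<>)); // open generic: skipped by validation
        if (registerClosedConsumer)
        {
            services.AddTransient<FakeController>(); // validated, which closes ForecastService<T>
        }

        try
        {
            services.BuildServiceProvider(new ServiceProviderOptions { ValidateOnBuild = true }).Dispose();
            return false;
        }
        catch (AggregateException ex)
        {
            Console.WriteLine(ex.InnerExceptions[0].Message);
            return true;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(OpenGenericDemo.ValidationFails(registerClosedConsumer: false)); // False
        Console.WriteLine(OpenGenericDemo.ValidationFails(registerClosedConsumer: true));  // True
    }
}
```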

Enabling service validation in other environments

That's the last of the gotchas I'm aware of, but as a final note, it's worth remembering that service provider validation is only enabled in the Development environment by default. That's because there's a startup cost to it, the same as for scope validation.

However, if you have any sort of "conditional service registration", where a different service is registered in Development than in other environments, you may want to enable validation in other environments too. You can do this by adding an additional UseDefaultServiceProvider call to your default host builder, in Program.cs. In the example below I've enabled ValidateOnBuild in all environments, but kept scope validation in Development only:

```csharp
public class Program
{
    public static void Main(string[] args)
    {
        CreateHostBuilder(args).Build().Run();
    }

    public static IHostBuilder CreateHostBuilder(string[] args) =>
        Host.CreateDefaultBuilder(args)
            .ConfigureWebHostDefaults(webBuilder =>
            {
                webBuilder.UseStartup<Startup>();
            })
            .UseDefaultServiceProvider((context, options) =>
            {
                options.ValidateScopes = context.HostingEnvironment.IsDevelopment();
                options.ValidateOnBuild = true;
            });
}
```
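To see why this matters for conditional registrations, here is a sketch assuming the Microsoft.Extensions.DependencyInjection package, where the hypothetical `IEmailSender`, `SmtpEmailSender` and `SmtpSettings` types stand in for an environment-specific service. The Development configuration is complete, but the production one forgets `SmtpSettings`. With validation enabled only in Development (the default), that mistake would not surface until the sender was first requested in production:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical types for an environment-specific service.
public interface IEmailSender { }

public class FakeEmailSender : IEmailSender { }

public class SmtpSettings { }

public class SmtpEmailSender : IEmailSender
{
    public SmtpEmailSender(SmtpSettings settings) { }
}

public static class ConditionalRegistrationDemo
{
    // Hypothetical ConfigureServices with a conditional registration.
    // SmtpSettings is "forgotten", so only the non-Development setup is broken.
    public static void ConfigureServices(IServiceCollection services, bool isDevelopment)
    {
        if (isDevelopment)
        {
            services.AddSingleton<IEmailSender, FakeEmailSender>();
        }
        else
        {
            services.AddSingleton<IEmailSender, SmtpEmailSender>();
        }
    }

    // Mirrors the UseDefaultServiceProvider options above: ValidateOnBuild everywhere,
    // ValidateScopes only in Development. Returns true if validation rejected the configuration.
    public static bool ValidationFails(bool isDevelopment)
    {
        var services = new ServiceCollection();
        ConfigureServices(services, isDevelopment);

        var options = new ServiceProviderOptions
        {
            ValidateOnBuild = true,
            ValidateScopes = isDevelopment,
        };

        try
        {
            services.BuildServiceProvider(options).Dispose();
            return false;
        }
        catch (AggregateException ex)
        {
            Console.WriteLine(ex.InnerExceptions[0].Message);
            return true;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(ConditionalRegistrationDemo.ValidationFails(isDevelopment: true));  // False
        Console.WriteLine(ConditionalRegistrationDemo.ValidationFails(isDevelopment: false)); // True
    }
}
```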

Summary

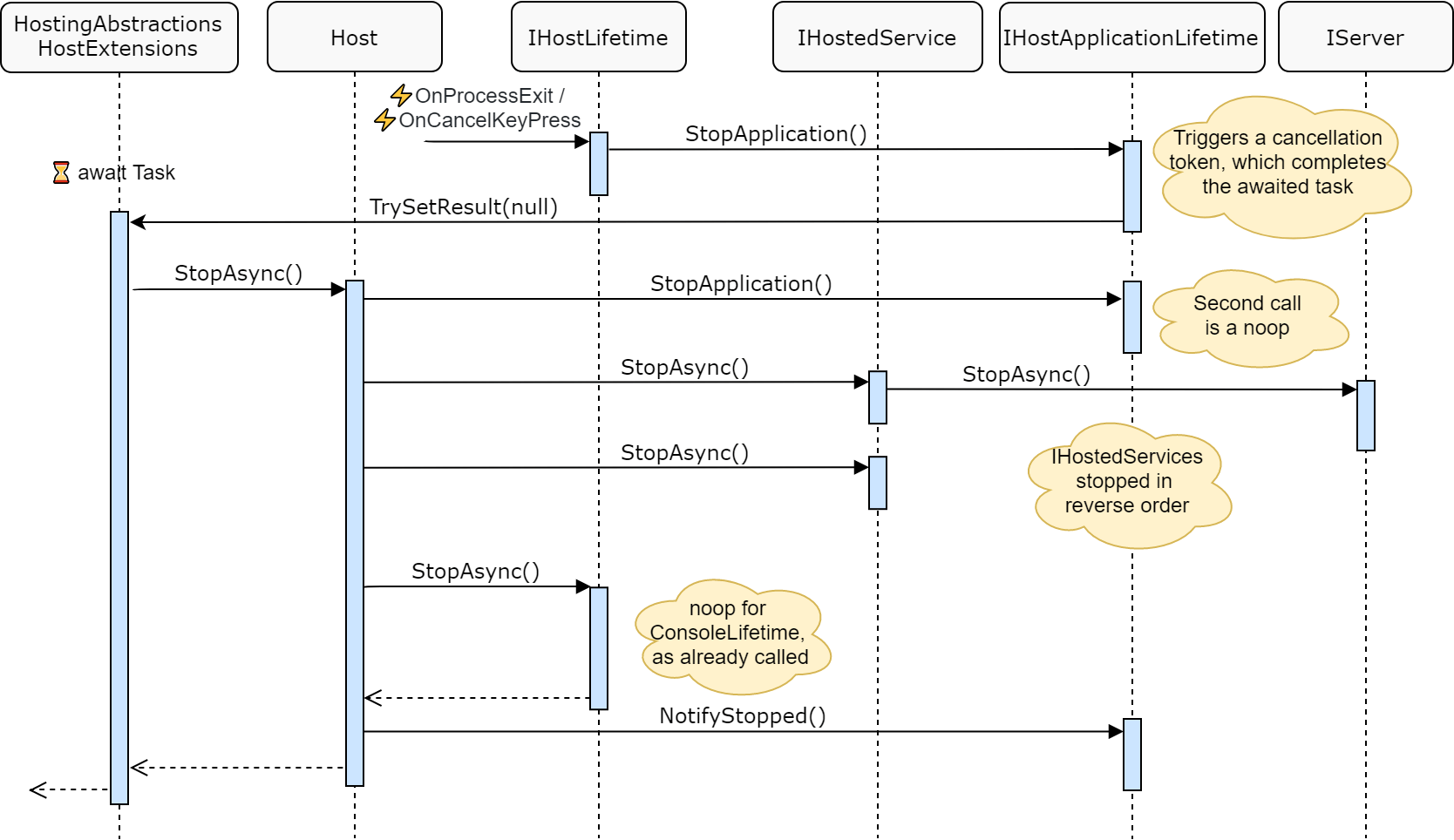

In this post I described the ValidateOnBuild feature, which is new in .NET Core 3.0. This allows the Microsoft.Extensions.DependencyInjection container to check for errors in your service configuration when a service provider is first built. This can be used to detect issues on application startup, instead of at runtime when the misconfigured service is requested.

While useful, there are a number of cases that the validation won't catch, such as constructor dependencies of MVC controllers (unless you use AddControllersAsServices()), [FromServices] parameters, services obtained from the IServiceProvider service locator (including inside factory functions), and open generics. You can work around some of these, but even if you can't, it's worth keeping them in mind, rather than relying on the validation to catch 100% of your DI problems!