![A deep dive into the ASP.NET Core CORS library]()

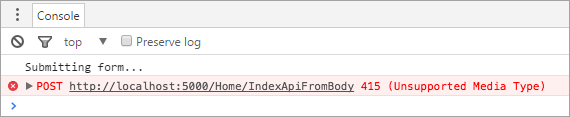

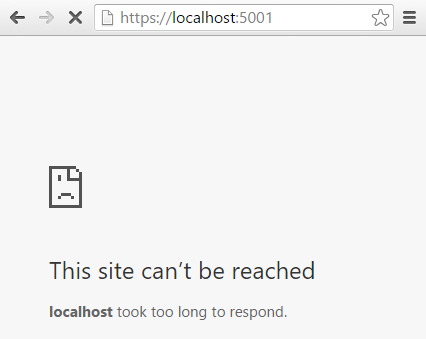

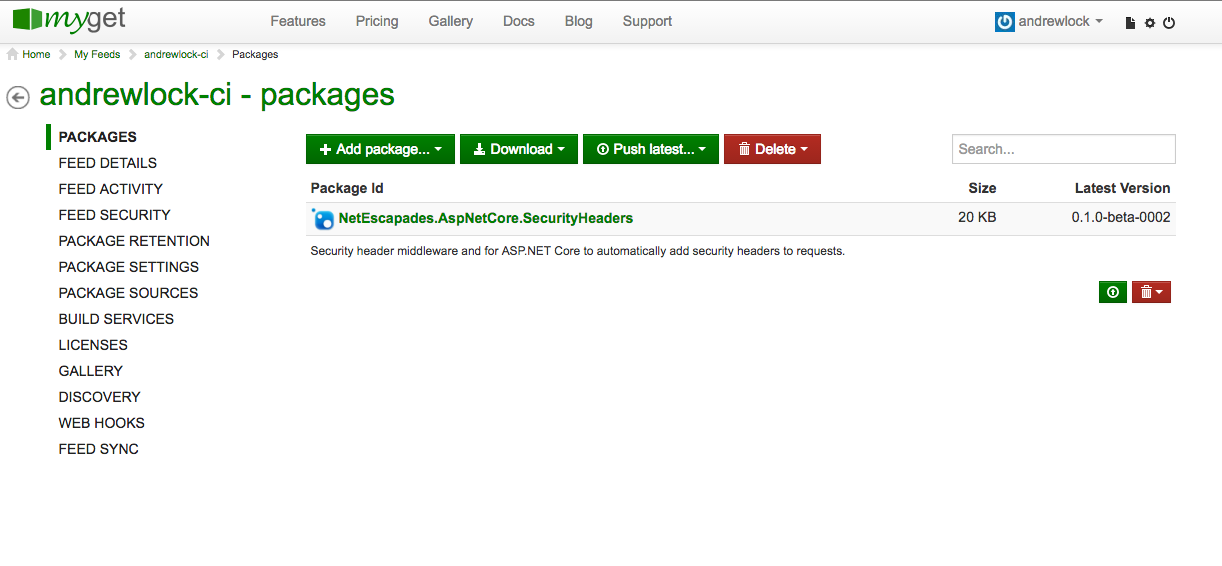

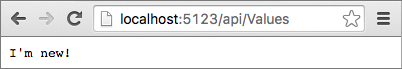

In a previous post I showed how you could use custom middleware to automatically add security headers to responses. This works well if you require a single policy to be applied to all requests. But what if you want certain actions to have a stricter policy where possible?

For example, it may be desirable to enable the Content-Security-Policy header to mitigate Cross-Site Scripting (XSS) attacks, but there are pretty stringent requirements for its use. A strict policy disallows inline JavaScript, for example. With the approach described previously, if you needed it disabled anywhere, you would have to disable it everywhere.

In order to make the security headers approach more flexible, I wanted to introduce the idea of multiple policies that could be applied to MVC controllers at the action or controller level, only falling back to the default policy when no others were specified.

The idea was that you could use an attribute [SecurityHeaderPolicy("policyName")] to indicate that a particular action or controller should use the specified "policyName" policy, which would be registered during configuration. The only problem is I wasn't sure how to connect all the parts.

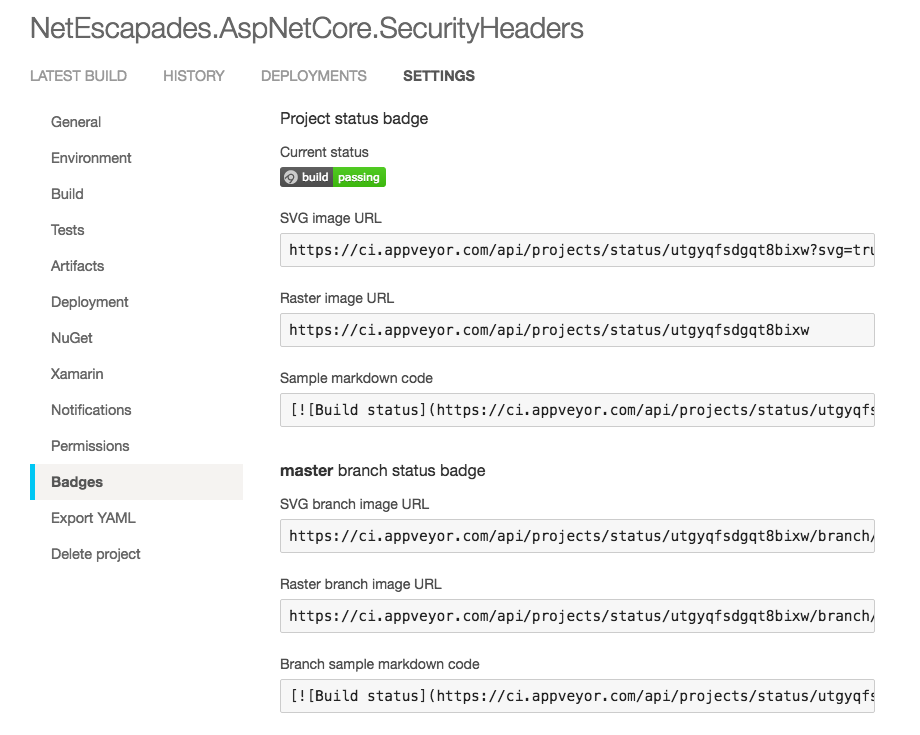

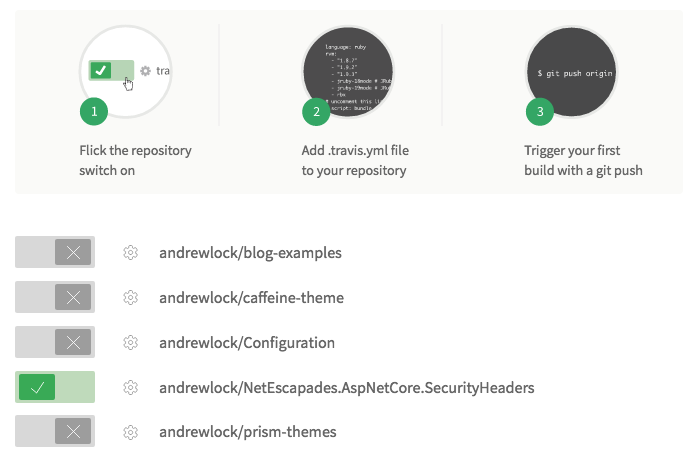

To get some inspiration, I decided to explore the code behind the Cross-Origin Resource Sharing (CORS) library which, being part of ASP.NET Core, is open source on GitHub. The design of the ASP.NET Core CORS library is pretty much exactly what I have described: you can apply a policy at the middleware level, or register multiple policies and select one at runtime via MVC attributes.

In this post I won't be going into detail about how CORS itself works, or how to enable it for your application. If that's what you're after, I suggest you check out the documentation, which is excellent.

Instead, I'm going to dive into the code of the Microsoft.AspNetCore.Cors and Microsoft.AspNetCore.Mvc libraries themselves. I'll describe some of the patterns the ASP.NET Core team have used, and the infrastructure required to build a system like the one I've outlined.

All code is pulled directly from the repos, though generally with comments and precondition checks removed.

Middleware or filters?

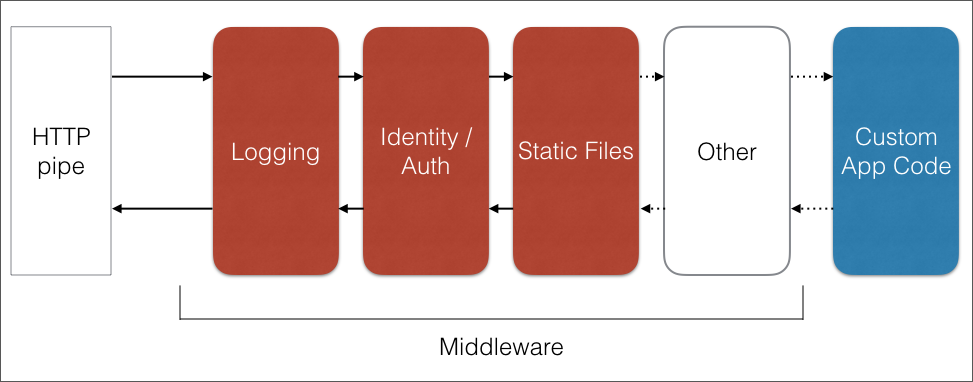

One of the key decisions made by the team is to split out the core CORS functionality (applying the appropriate headers etc) into a separate service, which is consumed in two distinct places - the middleware infrastructure and the MVC infrastructure.

Also, they have made the decision that the middleware implementation and the MVC attribute implementation work independently - you should only use one in your application otherwise you will get unexpected results.

Whichever approach you choose, there is a lot of code in common. The mechanisms for building policies, registering them and applying them at runtime are shared by both; only the way a particular policy is selected at runtime, and how the services are invoked, varies between the two methods.

Registering your policies

There are three main classes associated with the building and registering of policies: CorsOptions, CorsPolicy and CorsPolicyBuilder.

The CorsPolicy class is the heart of the library - it contains various properties that define the CORS mechanism, such as which headers, methods and origins are supported by a given resource.

```csharp
public class CorsPolicy
{
    public bool AllowAnyHeader { get { ... } }

    public bool AllowAnyMethod { get { ... } }

    public bool AllowAnyOrigin { get { ... } }

    public IList<string> ExposedHeaders { get; } = new List<string>();

    public IList<string> Headers { get; } = new List<string>();

    public IList<string> Methods { get; } = new List<string>();

    public IList<string> Origins { get; } = new List<string>();

    public TimeSpan? PreflightMaxAge { get { ... } }

    public bool SupportsCredentials { get; set; }
}
```

The CorsPolicyBuilder is a utility class to help build a CorsPolicy correctly, and contains methods such as AllowAnyOrigin() and AllowCredentials().
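To make the builder pattern concrete for the security headers scenario that kicked off this post, here's a minimal, self-contained sketch. HeaderPolicy, HeaderPolicyBuilder and their methods are hypothetical names I've made up for illustration; they're not part of the CORS library, and the real CorsPolicyBuilder has a different API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical policy: the set of response headers it should add.
public sealed class HeaderPolicy
{
    public HeaderPolicy(IReadOnlyDictionary<string, string> headers) => Headers = headers;

    public IReadOnlyDictionary<string, string> Headers { get; }
}

// Hypothetical fluent builder, loosely modelled on CorsPolicyBuilder:
// each method records a setting and returns the builder so calls can be chained.
public sealed class HeaderPolicyBuilder
{
    private readonly Dictionary<string, string> _headers =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    public HeaderPolicyBuilder AddHeader(string name, string value)
    {
        _headers[name] = value;
        return this;
    }

    public HeaderPolicyBuilder AddFrameOptionsDeny() =>
        AddHeader("X-Frame-Options", "DENY");

    public HeaderPolicyBuilder AddContentSecurityPolicy(string directives) =>
        AddHeader("Content-Security-Policy", directives);

    // Copy the headers so later builder calls can't mutate an already-built policy.
    public HeaderPolicy Build() =>
        new HeaderPolicy(new Dictionary<string, string>(_headers, StringComparer.OrdinalIgnoreCase));
}
```

Usage would look like `new HeaderPolicyBuilder().AddFrameOptionsDeny().Build()`. Copying the headers in Build() means that reusing the builder afterwards doesn't change policies that have already been registered.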

The core of the CorsOptions class is a private IDictionary<string, CorsPolicy> which contains all the policies currently registered. There are utility methods for adding a CorsPolicy to the dictionary with a given key, and for retrieving them by key, but it is little more than a dictionary of all the policies that are registered.

```csharp
public class CorsOptions
{
    private IDictionary<string, CorsPolicy> PolicyMap { get; } = new Dictionary<string, CorsPolicy>();

    public string DefaultPolicyName { get; set; }

    public void AddPolicy(string name, CorsPolicy policy)
    {
        PolicyMap[name] = policy;
    }

    public void AddPolicy(string name, Action<CorsPolicyBuilder> configurePolicy)
    {
        var policyBuilder = new CorsPolicyBuilder();
        configurePolicy(policyBuilder);
        PolicyMap[name] = policyBuilder.Build();
    }

    public CorsPolicy GetPolicy(string name)
    {
        return PolicyMap.ContainsKey(name) ? PolicyMap[name] : null;
    }
}
```
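The same shape works for the security headers case. The sketch below is a hypothetical HeaderPolicyOptions class (not a real type) that mirrors CorsOptions, with one small change: GetPolicy uses TryGetValue, so the dictionary is only searched once.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical policy type: a set of response headers.
public sealed class HeaderPolicy
{
    public Dictionary<string, string> Headers { get; } =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
}

// Hypothetical options class with the same shape as CorsOptions:
// a private name -> policy map plus a default policy name.
public sealed class HeaderPolicyOptions
{
    private readonly Dictionary<string, HeaderPolicy> _policyMap =
        new Dictionary<string, HeaderPolicy>(StringComparer.Ordinal);

    public string? DefaultPolicyName { get; set; }

    // Adding a policy under an existing name replaces it, as in CorsOptions.
    public void AddPolicy(string name, HeaderPolicy policy) => _policyMap[name] = policy;

    // Callback overload, like AddPolicy(string, Action<CorsPolicyBuilder>).
    public void AddPolicy(string name, Action<HeaderPolicy> configurePolicy)
    {
        var policy = new HeaderPolicy();
        configurePolicy(policy);
        _policyMap[name] = policy;
    }

    // Single lookup; returns null when no policy has that name.
    public HeaderPolicy? GetPolicy(string name) =>
        _policyMap.TryGetValue(name, out var policy) ? policy : null;
}
```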

Finally, there is an AddCors() extension method on IServiceCollection, which you call when registering your services, and which wraps a call to services.Configure<CorsOptions>(). This uses the options pattern to register the CorsOptions and ensures they are available for dependency injection later.
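If you haven't come across the options pattern before, the key idea is that registration only records configuration callbacks, and they're applied when the options are first requested. The sketch below is a deliberately stripped-down, hypothetical stand-in for that behaviour (OptionsSketch<T> and SecurityHeaderOptions are made-up names); the real implementation in Microsoft.Extensions.Options does much more, such as named options and validation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of the options pattern: Configure() only records
// delegates; the options instance is created, and the delegates applied in
// registration order, the first time Value is read.
public sealed class OptionsSketch<TOptions> where TOptions : new()
{
    private readonly List<Action<TOptions>> _configureActions = new List<Action<TOptions>>();
    private readonly Lazy<TOptions> _value;

    public OptionsSketch()
    {
        _value = new Lazy<TOptions>(() =>
        {
            var options = new TOptions();
            foreach (var configure in _configureActions)
            {
                configure(options);
            }
            return options;
        });
    }

    // Callbacks added after the first read are ignored, because the value is cached.
    public void Configure(Action<TOptions> configure) => _configureActions.Add(configure);

    public TOptions Value => _value.Value;
}

// Hypothetical options type used by the example.
public sealed class SecurityHeaderOptions
{
    public string DefaultPolicyName { get; set; } = "default";

    public List<string> PolicyNames { get; } = new List<string>();
}
```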

This mechanism for configuration seems pretty standard and allows quite a lot of flexibility for configuring multiple policies in code. However, the design of the CorsOptions object doesn't really lend itself to binding to a configuration data source, which would allow, for example, different policies defined by different environment variables.

Given the relatively complex requirements of implementing CORS correctly, this is probably intentional. It might have been a nice addition to allow, for example, binding the list of allowed origins from appsettings.json, but it's a minor point.

Finding and applying a policy

So your configuration is complete and your policies are registered; now how are these policies found at runtime? That depends on whether you are using the middleware or the MVC attributes. With the middleware approach, you have the option to pass a CorsPolicyBuilder configuration delegate directly when the middleware is registered. If you do, it is used to build a CorsPolicy, which is injected into the middleware constructor and used for every request. Alternatively, you can provide a policy name as a string, which is injected into the middleware instead.

When using the MVC attributes, you also provide a policy name via an [EnableCors(policyName)] attribute at the global, controller or action level, which, after a relatively complex series of steps that I'll cover later, eventually spits out a policy name at the end of it.

This string "policyName" is used to obtain a CorsPolicy to apply by using the ICorsPolicyProvider interface:

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;

public interface ICorsPolicyProvider
{
    Task<CorsPolicy> GetPolicyAsync(HttpContext context, string policyName);
}
```

This can be used to asynchronously retrieve a CorsPolicy given the current HttpContext and the policy name. The default implementation in the library (cunningly called DefaultCorsPolicyProvider) simply returns the matching policy from the provided options object, falling back to DefaultPolicyName when no name is given, or null if no policy is found:

```csharp
public class DefaultCorsPolicyProvider : ICorsPolicyProvider
{
    private readonly CorsOptions _options;

    public DefaultCorsPolicyProvider(IOptions<CorsOptions> options)
    {
        _options = options.Value;
    }

    public Task<CorsPolicy> GetPolicyAsync(HttpContext context, string policyName)
    {
        return Task.FromResult(_options.GetPolicy(policyName ?? _options.DefaultPolicyName));
    }
}
```
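Translated to the security headers scenario, a provider with the same lookup-then-fallback behaviour might look like the following. RequestInfo stands in for HttpContext, and every type here is hypothetical. The lookup is synchronous, so, like DefaultCorsPolicyProvider, it just wraps the result in a completed Task.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-in for HttpContext: only the request path is modelled.
public sealed class RequestInfo
{
    public RequestInfo(string path) => Path = path;

    public string Path { get; }
}

// Hypothetical policy type, identified by name for simplicity.
public sealed class HeaderPolicy
{
    public HeaderPolicy(string name) => Name = name;

    public string Name { get; }
}

// Hypothetical counterpart to ICorsPolicyProvider.
public interface IHeaderPolicyProvider
{
    Task<HeaderPolicy?> GetPolicyAsync(RequestInfo request, string? policyName);
}

// Same behaviour as DefaultCorsPolicyProvider: look the policy up by name, use the
// default name when none is supplied, and return null if nothing matches.
public sealed class DefaultHeaderPolicyProvider : IHeaderPolicyProvider
{
    private readonly IReadOnlyDictionary<string, HeaderPolicy> _policies;
    private readonly string _defaultPolicyName;

    public DefaultHeaderPolicyProvider(IReadOnlyDictionary<string, HeaderPolicy> policies, string defaultPolicyName)
    {
        _policies = policies;
        _defaultPolicyName = defaultPolicyName;
    }

    // The request is unused here, but it's available to custom providers.
    public Task<HeaderPolicy?> GetPolicyAsync(RequestInfo request, string? policyName)
    {
        _policies.TryGetValue(policyName ?? _defaultPolicyName, out var policy);
        return Task.FromResult<HeaderPolicy?>(policy);
    }
}
```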

If a policy is found, it can be applied using an instance of a service implementing ICorsService:

```csharp
public interface ICorsService
{
    CorsResult EvaluatePolicy(HttpContext context, CorsPolicy policy);

    void ApplyResult(CorsResult result, HttpResponse response);
}
```

The EvaluatePolicy method returns an intermediate CorsResult that indicates the necessary action to take (which headers to set, and whether to respond to an identified preflight request). ApplyResult is then used to apply these changes to the HttpResponse, nicely separating these two distinct actions.
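Here's a hypothetical sketch of that evaluate/apply split for security headers, using plain dictionaries in place of HttpResponse. HeaderResult and HeaderService are made-up names, and the rule that headers already set by the application are left alone is my own assumption for the example, not something taken from CorsService.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical intermediate result, similar in spirit to CorsResult:
// a description of what should happen, with no side effects yet.
public sealed class HeaderResult
{
    public Dictionary<string, string> HeadersToSet { get; } =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
}

public static class HeaderService
{
    // Step 1: decide. Only reads the policy and the headers already present.
    public static HeaderResult Evaluate(
        IReadOnlyDictionary<string, string> policy,
        IReadOnlyDictionary<string, string> existingResponseHeaders)
    {
        var result = new HeaderResult();
        foreach (var pair in policy)
        {
            // Assumption for this sketch: don't overwrite headers the app set explicitly.
            if (!existingResponseHeaders.ContainsKey(pair.Key))
            {
                result.HeadersToSet[pair.Key] = pair.Value;
            }
        }
        return result;
    }

    // Step 2: act. Writes the previously computed headers to the response.
    public static void Apply(HeaderResult result, IDictionary<string, string> responseHeaders)
    {
        foreach (var pair in result.HeadersToSet)
        {
            responseHeaders[pair.Key] = pair.Value;
        }
    }
}
```

Because Evaluate has no side effects, it can be tested on its own, and the decision can be inspected before anything touches the response.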

Applying CORS via middleware

All of the classes described so far are used regardless of which method you choose to apply CORS. If you use the middleware, then the final connecting piece is the middleware itself, which is surprisingly simple. With the constructors removed, the middleware becomes:

```csharp
public class CorsMiddleware
{
    private readonly RequestDelegate _next;
    private readonly ICorsService _corsService;
    private readonly ICorsPolicyProvider _corsPolicyProvider;
    private readonly CorsPolicy _policy;
    private readonly string _corsPolicyName;

    public async Task Invoke(HttpContext context)
    {
        if (context.Request.Headers.ContainsKey(CorsConstants.Origin))
        {
            var corsPolicy = _policy ?? await _corsPolicyProvider?.GetPolicyAsync(context, _corsPolicyName);
            if (corsPolicy != null)
            {
                var corsResult = _corsService.EvaluatePolicy(context, corsPolicy);
                _corsService.ApplyResult(corsResult, context.Response);

                var accessControlRequestMethod =
                    context.Request.Headers[CorsConstants.AccessControlRequestMethod];
                if (string.Equals(
                        context.Request.Method,
                        CorsConstants.PreflightHttpMethod,
                        StringComparison.Ordinal) &&
                    !StringValues.IsNullOrEmpty(accessControlRequestMethod))
                {
                    // Since there is a policy which was identified,
                    // always respond to preflight requests.
                    context.Response.StatusCode = StatusCodes.Status204NoContent;
                    return;
                }
            }
        }

        await _next(context);
    }
}
```

In the Invoke method, which is called when the middleware executes, a policy is first identified, either from the constructor-injected policy or via the ICorsPolicyProvider. If a policy is found, it is evaluated and applied using the ICorsService. Finally, if the request is a preflight request (an OPTIONS request with a non-empty Access-Control-Request-Method header), the middleware short-circuits the pipeline and returns a 204 status code. Otherwise, the next piece of middleware is invoked. Simple!
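The same control flow can be sketched without any ASP.NET Core dependencies. FakeContext and FakeRequestDelegate are hypothetical stand-ins for HttpContext and RequestDelegate, and the evaluate/apply step is collapsed into simply copying the policy's headers onto the response.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical minimal stand-in for HttpContext.
public sealed class FakeContext
{
    public string Method { get; set; } = "GET";
    public Dictionary<string, string> RequestHeaders { get; } = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    public Dictionary<string, string> ResponseHeaders { get; } = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    public int StatusCode { get; set; } = 200;
}

// Same shape as RequestDelegate, but over the fake context.
public delegate Task FakeRequestDelegate(FakeContext context);

// Mirrors the CorsMiddleware flow: apply headers when an Origin header is present,
// short-circuit preflights with 204, and otherwise call the next middleware.
public sealed class PreflightAwareMiddleware
{
    private readonly FakeRequestDelegate _next;
    private readonly IReadOnlyDictionary<string, string> _policyHeaders;

    public PreflightAwareMiddleware(FakeRequestDelegate next, IReadOnlyDictionary<string, string> policyHeaders)
    {
        _next = next;
        _policyHeaders = policyHeaders;
    }

    public async Task Invoke(FakeContext context)
    {
        if (context.RequestHeaders.ContainsKey("Origin"))
        {
            foreach (var pair in _policyHeaders)
            {
                context.ResponseHeaders[pair.Key] = pair.Value;
            }

            var isPreflight =
                string.Equals(context.Method, "OPTIONS", StringComparison.Ordinal) &&
                context.RequestHeaders.TryGetValue("Access-Control-Request-Method", out var method) &&
                !string.IsNullOrEmpty(method);

            if (isPreflight)
            {
                // End the pipeline here: later middleware never sees the preflight.
                context.StatusCode = 204;
                return;
            }
        }

        await _next(context);
    }
}
```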

Applying CORS via MVC attributes

While the middleware approach is simple, it only allows you to provide a single CORS policy for all requests that it processes. If you need more flexibility then there are a couple of approaches you can take.

If you are not using MVC, then implementing your own ICorsService and/or ICorsPolicyProvider may be your only option. The ICorsPolicyProvider is passed the HttpContext when looking for a policy, so it would be possible to implement this interface and return a particular policy based on something exposed there. Alternatively, the CorsService class is designed with extensibility in mind, with several methods exposed as public virtual to allow easy overriding in derived classes. This gives you a ton of flexibility, but obviously 'With Great Power…'.
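As an example of the first option, here's a hypothetical provider (again using made-up types rather than the real ICorsPolicyProvider) that picks a policy from the request path, and only falls back to the named or default policy when no path rule matches.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-in for HttpContext: only the request path is modelled.
public sealed class RequestInfo
{
    public RequestInfo(string path) => Path = path;

    public string Path { get; }
}

public sealed class HeaderPolicy
{
    public HeaderPolicy(string name) => Name = name;

    public string Name { get; }
}

public interface IHeaderPolicyProvider
{
    Task<HeaderPolicy?> GetPolicyAsync(RequestInfo request, string? policyName);
}

// Chooses a policy from the request itself: the first matching path prefix wins,
// otherwise it falls back to the named (or default) policy.
public sealed class PathPrefixHeaderPolicyProvider : IHeaderPolicyProvider
{
    private readonly List<(string Prefix, HeaderPolicy Policy)> _rules = new List<(string Prefix, HeaderPolicy Policy)>();
    private readonly Dictionary<string, HeaderPolicy> _named = new Dictionary<string, HeaderPolicy>();
    private readonly string _defaultName;

    public PathPrefixHeaderPolicyProvider(string defaultName) => _defaultName = defaultName;

    public void AddNamed(HeaderPolicy policy) => _named[policy.Name] = policy;

    // Simple prefix match, so "/admin" also matches "/administrator".
    public void AddPathRule(string prefix, HeaderPolicy policy) => _rules.Add((prefix, policy));

    public Task<HeaderPolicy?> GetPolicyAsync(RequestInfo request, string? policyName)
    {
        foreach (var (prefix, policy) in _rules)
        {
            if (request.Path.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
            {
                return Task.FromResult<HeaderPolicy?>(policy);
            }
        }

        _named.TryGetValue(policyName ?? _defaultName, out var named);
        return Task.FromResult<HeaderPolicy?>(named);
    }
}
```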

If you are using MVC then the [EnableCors] and [DisableCors] attributes should provide you all the flexibility you need, allowing you to specify different policies, in a cascading fashion, at the global, controller and action level. It's very simple to consume, but under the hood there's a lot of moving parts!

Dumb marker attributes

First, the attributes: [EnableCors] and [DisableCors]. These are simple marker attributes, with no functionality beyond (in the case of [EnableCors]) capturing a policy name. They implement IEnableCorsAttribute and IDisableCorsAttribute, but are used purely to provide metadata, not functionality:

```csharp
public interface IDisableCorsAttribute { }

public class DisableCorsAttribute : Attribute, IDisableCorsAttribute { }

public interface IEnableCorsAttribute
{
    string PolicyName { get; set; }
}

public class EnableCorsAttribute : Attribute, IEnableCorsAttribute
{
    public EnableCorsAttribute(string policyName)
    {
        PolicyName = policyName;
    }

    public string PolicyName { get; set; }
}
```
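For the security headers scenario, the equivalent marker attribute could be as simple as the sketch below. SecurityHeaderPolicyAttribute, SampleController and PolicyMetadata are hypothetical. The ResolvePolicyName helper just shows that something else has to read the metadata, here via reflection, with the action-level name taking precedence over the controller-level one.

```csharp
using System;
using System.Reflection;

// Hypothetical marker attribute: like [EnableCors], it only carries a policy
// name and has no behaviour of its own.
[AttributeUsage(AttributeTargets.Class | AttributeTargets.Method, AllowMultiple = false, Inherited = true)]
public sealed class SecurityHeaderPolicyAttribute : Attribute
{
    public SecurityHeaderPolicyAttribute(string policyName) => PolicyName = policyName;

    public string PolicyName { get; }
}

// Hypothetical controller used only to demonstrate reading the metadata.
[SecurityHeaderPolicy("relaxed")]
public class SampleController
{
    [SecurityHeaderPolicy("strict")]
    public void Login() { }

    public void Index() { }
}

public static class PolicyMetadata
{
    // The action-level attribute wins; otherwise fall back to the controller's.
    public static string? ResolvePolicyName(MethodInfo action)
    {
        var onAction = action.GetCustomAttribute<SecurityHeaderPolicyAttribute>();
        var onController = action.DeclaringType?.GetCustomAttribute<SecurityHeaderPolicyAttribute>();
        return onAction?.PolicyName ?? onController?.PolicyName;
    }
}
```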

Authorization filters doing all the hard work

So if the attributes themselves don't apply the CORS policy, where does the magic happen? The simple answer is in a different type of filter: an ICorsAuthorizationFilter. There are just two classes that implement this interface, CorsAuthorizationFilter and DisableCorsAuthorizationFilter.

These filters work very similarly to the CorsMiddleware shown previously. In its OnAuthorizationAsync method (from IAsyncAuthorizationFilter), the CorsAuthorizationFilter uses an injected ICorsPolicyProvider to find the appropriate CorsPolicy by name, then evaluates and applies it using the ICorsService.

```csharp
public async Task OnAuthorizationAsync(Filters.AuthorizationFilterContext context)
{
    // If this filter is not closest to the action, it is not applicable.
    if (!IsClosestToAction(context.Filters))
    {
        return;
    }

    var httpContext = context.HttpContext;
    var request = httpContext.Request;
    if (request.Headers.ContainsKey(CorsConstants.Origin))
    {
        var policy = await _corsPolicyProvider.GetPolicyAsync(httpContext, PolicyName);
        if (policy == null)
        {
            throw new InvalidOperationException(
                Resources.FormatCorsAuthorizationFilter_MissingCorsPolicy(PolicyName));
        }

        var result = _corsService.EvaluatePolicy(context.HttpContext, policy);
        _corsService.ApplyResult(result, context.HttpContext.Response);

        var accessControlRequestMethod =
            httpContext.Request.Headers[CorsConstants.AccessControlRequestMethod];
        if (string.Equals(
                request.Method,
                CorsConstants.PreflightHttpMethod,
                StringComparison.Ordinal) &&
            !StringValues.IsNullOrEmpty(accessControlRequestMethod))
        {
            // If this was a preflight, there is no need to run anything else.
            // Also the response is always 200 so that anyone after mvc can handle the pre flight request.
            context.Result = new StatusCodeResult(StatusCodes.Status200OK);
        }

        // Continue with other filters and action.
    }
}
```

The main difference between the CorsAuthorizationFilter and the middleware is the additional call to IsClosestToAction at the start of the method, which bypasses the filter if there is a more specific ICorsAuthorizationFilter closer to the action being executed. This lets a globally applied filter be overridden by a controller-level filter, which in turn can be overridden by a filter applied to an action. (The filter also differs in throwing if the named policy can't be found, and in returning a 200 rather than a 204 for preflight requests.)
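As I understand it, the check relies on the filters for an action being ordered from least to most specific (global, then controller, then action), so the last matching filter in the list is the closest one. Here's a hypothetical sketch of that idea using made-up types:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical equivalents of IFilterMetadata and ICorsAuthorizationFilter.
public interface IFilterMarker { }

public interface IHeaderPolicyFilter : IFilterMarker
{
    string Name { get; }
}

public sealed class HeaderPolicyFilter : IHeaderPolicyFilter
{
    public HeaderPolicyFilter(string name) => Name = name;

    public string Name { get; }

    // Assumes the list is ordered global -> controller -> action, so the last
    // IHeaderPolicyFilter is the one declared closest to the action.
    public bool IsClosestToAction(IEnumerable<IFilterMarker> filtersOnAction) =>
        ReferenceEquals(filtersOnAction.OfType<IHeaderPolicyFilter>().Last(), this);
}

// Some other kind of filter, which the check ignores.
public sealed class UnrelatedFilter : IFilterMarker { }
```

Every matching filter still runs, but all except the closest one return immediately, which is exactly the behaviour the CORS filter gets from its early return.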

The DisableCorsAuthorizationFilter is effectively a stripped down version of the CorsAuthorizationFilter - it does not look for or apply a policy, and so does not add the CORS headers, but if it detects a preflight request it returns the 200 status code.

Creating attributes that have constructor dependencies

Phew! That was hard work. So we have our marker attributes to indicate which policy we want applied (or disabled), and we have our ICorsAuthorizationFilter implementations actually applying CORS, but how are the two connected? It's not like we decorate our controllers and actions with CorsAuthorizationFilter attributes. And why don't we just use those filters as attributes directly?

The simple answer is that the filter needs an ICorsService and an ICorsPolicyProvider in order to handle a CORS request. These are transient services which need to be injected from the IoC container. Unfortunately, attributes are instantiated by the runtime using only the compile-time constant arguments you give them, so there's no way to constructor-inject services into a filter applied directly as an attribute. That leaves you with the Service Locator anti-pattern, setter injection or similar, none of which are particularly appealing.

So how are the filters created? The answer to this is through the use of two more interfaces - IFilterFactory and IApplicationModelProvider.

IFilterFactory

Unsurprisingly, the IFilterFactory interface does exactly what it says on the tin - it creates an IFilterMetadata given an IServiceProvider:

```csharp
public interface IFilterFactory : IFilterMetadata
{
    bool IsReusable { get; }

    IFilterMetadata CreateInstance(IServiceProvider serviceProvider);
}
```

Although very simple, this interface very nicely allows you to inject additional dependencies where it wouldn't otherwise be possible. The IServiceProvider is essentially the IoC container that you can query to retrieve services. The CorsAuthorizationFilterFactory (elided) looks like this:

```csharp
public class CorsAuthorizationFilterFactory : IFilterFactory, IOrderedFilter
{
    private readonly string _policyName;

    public CorsAuthorizationFilterFactory(string policyName)
    {
        _policyName = policyName;
    }

    public int Order { get { return int.MinValue + 100; } }

    public bool IsReusable => true;

    public IFilterMetadata CreateInstance(IServiceProvider serviceProvider)
    {
        var filter = serviceProvider.GetRequiredService<CorsAuthorizationFilter>();
        filter.PolicyName = _policyName;
        return filter;
    }
}
```

When CreateInstance is called, the factory fetches a CorsAuthorizationFilter directly from the container - the ICorsService and ICorsPolicyProvider are automatically wired up using dependency injection internally, and the fully constructed filter is returned. All that remains is to set the policy name on the filter.

Another interesting point about IFilterFactory is that, as well as creating IFilterMetadata instances, it is itself an IFilterMetadata. Under the hood, the ASP.NET Core MVC pipeline can use these filters interchangeably, and simply checks whether a filter is an IFilterFactory before using it.
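Here's a self-contained sketch of both ideas: a factory that stores only the policy name and resolves its filter (with its constructor dependency) from an IServiceProvider, plus a resolver that treats factories and plain filters interchangeably. System.IServiceProvider is the real BCL interface, but everything else (the IPipelineFilter interfaces, TinyServiceProvider and so on) is hypothetical and much simpler than the MVC equivalents.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical equivalents of IFilterMetadata and IFilterFactory.
public interface IPipelineFilter { }

public interface IPipelineFilterFactory : IPipelineFilter
{
    bool IsReusable { get; } // Mirrors IFilterFactory.IsReusable; unused in this sketch.

    IPipelineFilter CreateInstance(IServiceProvider serviceProvider);
}

// Hypothetical dependency, standing in for ICorsService / ICorsPolicyProvider.
public interface IHeaderWriter { }

public sealed class DefaultHeaderWriter : IHeaderWriter { }

// Needs a constructor dependency, so it can't be applied directly as an attribute.
public sealed class HeaderPolicyFilter : IPipelineFilter
{
    public HeaderPolicyFilter(IHeaderWriter writer) => Writer = writer;

    public IHeaderWriter Writer { get; }

    public string PolicyName { get; set; } = "";
}

// Holds only what's known up front (the policy name) and asks the
// container for everything else when the filter is created.
public sealed class HeaderPolicyFilterFactory : IPipelineFilterFactory
{
    private readonly string _policyName;

    public HeaderPolicyFilterFactory(string policyName) => _policyName = policyName;

    public bool IsReusable => true;

    public IPipelineFilter CreateInstance(IServiceProvider serviceProvider)
    {
        var filter = serviceProvider.GetService(typeof(HeaderPolicyFilter)) as HeaderPolicyFilter
            ?? throw new InvalidOperationException("HeaderPolicyFilter is not registered.");
        filter.PolicyName = _policyName;
        return filter;
    }
}

// Tiny hypothetical container: each service type maps to a creation delegate.
public sealed class TinyServiceProvider : IServiceProvider
{
    private readonly Dictionary<Type, Func<IServiceProvider, object>> _registrations =
        new Dictionary<Type, Func<IServiceProvider, object>>();

    public void Register<T>(Func<IServiceProvider, T> create) where T : class =>
        _registrations[typeof(T)] = sp => create(sp);

    public object? GetService(Type serviceType) =>
        _registrations.TryGetValue(serviceType, out var create) ? create(this) : null;
}

public static class FilterResolver
{
    // Check for a factory first; otherwise use the metadata as-is.
    public static IPipelineFilter Resolve(IPipelineFilter metadata, IServiceProvider services) =>
        metadata is IPipelineFilterFactory factory ? factory.CreateInstance(services) : metadata;
}
```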

Although it's not used as part of the CORS functionality, that ability means you can create attributes which implement IFilterFactory and directly decorate your controllers with them, using them as a proxy to decorating with an attribute that requires dependency injection.

Some of the interfaces have changed a little since it was written, but this blog post at StrathWeb has a great explanation of this. It shows how to use the [ServiceFilter] attribute to apply a logging filter with [ServiceFilter(typeof(LogFilter))]; I recommend checking it out!

The last piece of the puzzle

Hopefully that all makes sense but you might still be scratching your head a little - we aren't decorating our methods with CorsAuthorizationFilterFactory either! How do we get from EnableCors to here?

The answer is by customising the ApplicationModel using an IApplicationModelProvider.

Wait, what?

This post is already way too long, so I won't go into too much detail, but essentially the application model is a representation of all the components (the controllers, actions and filters) that the MVC pipeline knows about. It is constructed by a number of IApplicationModelProviders.

The CORS implementation uses the CorsApplicationModelProvider to supplement the marker [EnableCors] and [DisableCors] attributes with instances of CorsAuthorizationFilterFactory and DisableCorsAuthorizationFilter. In its OnProvidersExecuting method, it loops through each controller, and each action within each controller, and adds the appropriate filter:

```csharp
public void OnProvidersExecuting(ApplicationModelProviderContext context)
{
    IEnableCorsAttribute enableCors;
    IDisableCorsAttribute disableCors;

    foreach (var controllerModel in context.Result.Controllers)
    {
        enableCors = controllerModel.Attributes.OfType<IEnableCorsAttribute>().FirstOrDefault();
        if (enableCors != null)
        {
            controllerModel.Filters.Add(new CorsAuthorizationFilterFactory(enableCors.PolicyName));
        }

        disableCors = controllerModel.Attributes.OfType<IDisableCorsAttribute>().FirstOrDefault();
        if (disableCors != null)
        {
            controllerModel.Filters.Add(new DisableCorsAuthorizationFilter());
        }

        foreach (var actionModel in controllerModel.Actions)
        {
            enableCors = actionModel.Attributes.OfType<IEnableCorsAttribute>().FirstOrDefault();
            if (enableCors != null)
            {
                actionModel.Filters.Add(new CorsAuthorizationFilterFactory(enableCors.PolicyName));
            }

            disableCors = actionModel.Attributes.OfType<IDisableCorsAttribute>().FirstOrDefault();
            if (disableCors != null)
            {
                actionModel.Filters.Add(new DisableCorsAuthorizationFilter());
            }
        }
    }
}
```
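The same translation step can be sketched with plain reflection. ControllerModelSketch and ActionModelSketch are hypothetical, heavily simplified stand-ins for MVC's ControllerModel and ActionModel, HeaderPolicyFilterFactory is a placeholder that only records the policy name, and Describe stands in for the work MVC's default providers do to build the model in the first place.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical marker attribute, as in the earlier security headers sketch.
[AttributeUsage(AttributeTargets.Class | AttributeTargets.Method)]
public sealed class SecurityHeaderPolicyAttribute : Attribute
{
    public SecurityHeaderPolicyAttribute(string policyName) => PolicyName = policyName;
    public string PolicyName { get; }
}

// Placeholder factory: a real one would create the filter from the container.
public sealed class HeaderPolicyFilterFactory
{
    public HeaderPolicyFilterFactory(string policyName) => PolicyName = policyName;
    public string PolicyName { get; }
}

public sealed class ActionModelSketch
{
    public ActionModelSketch(MethodInfo method) => Method = method;
    public MethodInfo Method { get; }
    public List<object> Filters { get; } = new List<object>();
}

public sealed class ControllerModelSketch
{
    public ControllerModelSketch(Type type) => Type = type;
    public Type Type { get; }
    public List<object> Filters { get; } = new List<object>();
    public List<ActionModelSketch> Actions { get; } = new List<ActionModelSketch>();
}

public static class HeaderPolicyModelProvider
{
    // Builds a model from the public instance methods declared on the controller.
    public static ControllerModelSketch Describe(Type controllerType)
    {
        var model = new ControllerModelSketch(controllerType);
        var flags = BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly;
        foreach (var method in controllerType.GetMethods(flags))
        {
            model.Actions.Add(new ActionModelSketch(method));
        }
        return model;
    }

    // Same shape as OnProvidersExecuting: turn marker attributes into filter factories.
    public static void Apply(IEnumerable<ControllerModelSketch> controllers)
    {
        foreach (var controller in controllers)
        {
            var onController = controller.Type.GetCustomAttribute<SecurityHeaderPolicyAttribute>();
            if (onController != null)
            {
                controller.Filters.Add(new HeaderPolicyFilterFactory(onController.PolicyName));
            }

            foreach (var action in controller.Actions)
            {
                var onAction = action.Method.GetCustomAttribute<SecurityHeaderPolicyAttribute>();
                if (onAction != null)
                {
                    action.Filters.Add(new HeaderPolicyFilterFactory(onAction.PolicyName));
                }
            }
        }
    }
}

// Hypothetical controller for the example.
[SecurityHeaderPolicy("relaxed")]
public class AccountController
{
    [SecurityHeaderPolicy("strict")]
    public void Login() { }

    public void Logout() { }
}
```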

All that remains is for these additional classes to be registered with the dependency injection container as part of the call to AddMvc in the ConfigureServices method of your app's Startup class, and all is good. This happens automatically when you configure MVC for CORS using an extension method on IMvcCoreBuilder, which eventually calls the following method:

```csharp
internal static void AddCorsServices(IServiceCollection services)
{
    services.AddCors();

    services.TryAddEnumerable(
        ServiceDescriptor.Transient<IApplicationModelProvider, CorsApplicationModelProvider>());
    services.TryAddTransient<CorsAuthorizationFilter, CorsAuthorizationFilter>();
}
```
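The difference between TryAdd and TryAddEnumerable matters here. TryAdd skips a registration if the service type is already registered, whereas TryAddEnumerable only skips it if the same service and implementation pair is already present, which is what lets several IApplicationModelProvider implementations coexist. Here's a hypothetical list-based sketch of those two rules (not the real ServiceCollection API):

```csharp
using System;
using System.Collections.Generic;

// Hypothetical registration record (think ServiceDescriptor).
public sealed class Registration
{
    public Registration(Type serviceType, Type implementationType)
    {
        ServiceType = serviceType;
        ImplementationType = implementationType;
    }

    public Type ServiceType { get; }
    public Type ImplementationType { get; }
}

public sealed class RegistrationList
{
    public List<Registration> Items { get; } = new List<Registration>();

    // Like TryAdd*: skip if *any* registration exists for the service type,
    // so an earlier (e.g. user-supplied) registration is not replaced.
    public void TryAdd(Type serviceType, Type implementationType)
    {
        if (!Items.Exists(r => r.ServiceType == serviceType))
        {
            Items.Add(new Registration(serviceType, implementationType));
        }
    }

    // Like TryAddEnumerable: skip only if this exact service/implementation
    // pair exists, so several implementations of one interface can coexist.
    public void TryAddEnumerable(Type serviceType, Type implementationType)
    {
        if (!Items.Exists(r => r.ServiceType == serviceType && r.ImplementationType == implementationType))
        {
            Items.Add(new Registration(serviceType, implementationType));
        }
    }
}

// Hypothetical types for the example.
public interface IModelProvider { }
public sealed class DefaultModelProvider : IModelProvider { }
public sealed class HeaderPolicyModelProvider : IModelProvider { }
```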

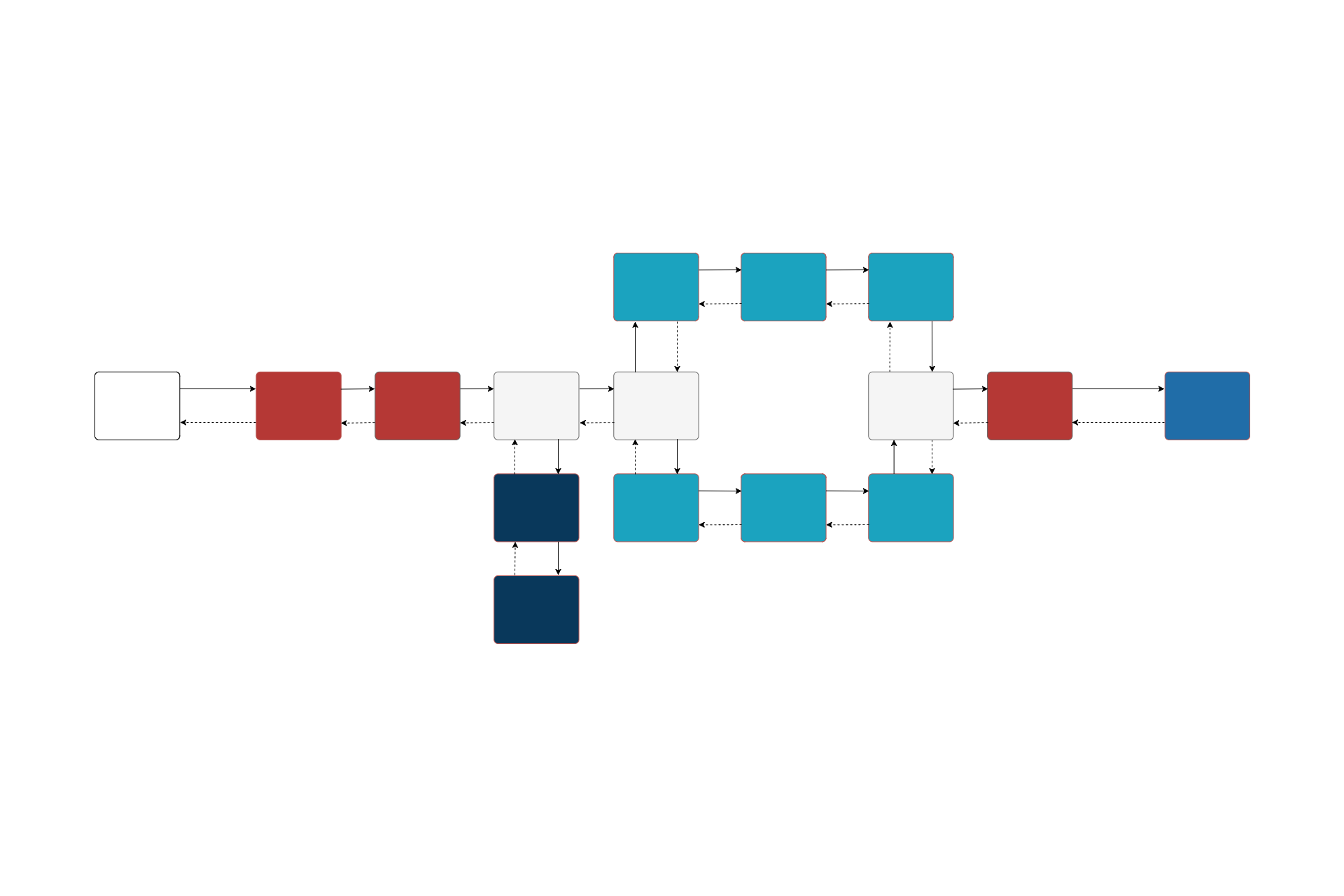

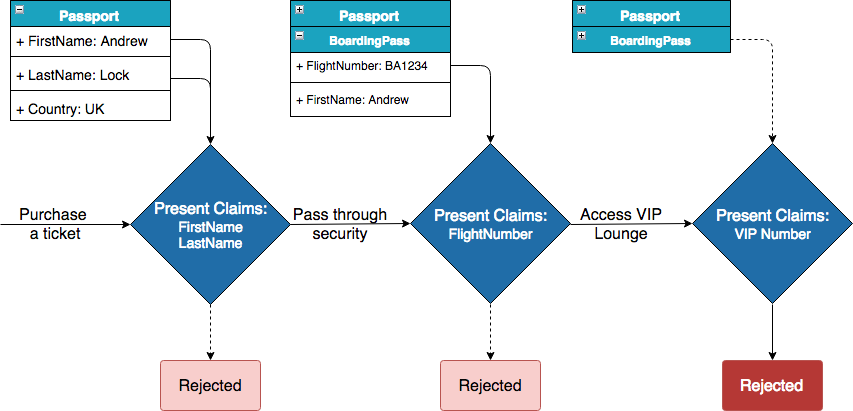

Summary

Phew! If you made it this far, colour me impressed. This definitely ended up being a meatier post than I had originally intended, mostly because the MVC implementation has more parts to it than I appreciated! As a recap, there are three main parts: the CORS services, the middleware, and the MVC implementation.

Services:

- Policies are registered using the CorsOptions and CorsPolicyBuilder classes.
- Policies are located and applied at runtime using the ICorsPolicyProvider and ICorsService.

Middleware:

- The CorsMiddleware, if configured, applies a single CORS policy object to all requests it handles.

MVC:

- Marker attributes [EnableCors] and [DisableCors] are applied to controllers, actions, or globally.
- When MVC builds its application model, a CorsApplicationModelProvider locates these attributes and adds a CorsAuthorizationFilterFactory or a DisableCorsAuthorizationFilter respectively.
- The CorsAuthorizationFilterFactory is used to create a fully service-injected CorsAuthorizationFilter.
- These authorization filters run early in the normal MVC pipeline (to intercept preflight requests) and apply the appropriate CORS policy (or not, in the case of the Disable attribute) in the same way as the middleware.

References