In this post I describe a pattern that lets you run arbitrary commands for your application in your Kubernetes cluster, by having a pod available for you to exec into. You can use this pod to perform ad-hoc maintenance, administration, queries—all those tasks that you can't easily schedule, because you don't know when you'll need to run them, or because that just doesn't make sense.

I'll describe the issue and the sort of tasks I'm thinking about, and discuss why this becomes tricky when you run your applications in Kubernetes. I'll then show the approach I use to make this possible: a long-running deployment of a pod containing a CLI tool that allows running the commands.

Background: running ad-hoc queries and tasks

One of the tenets of DevOps, and of declarative approaches to software deployment in general, is that you try to automate as much as possible. You don't want to have to run database migrations manually as part of a deploy, or to have to remember to perform a specific sequence of operations when deploying your code. That should all be automated: ideally a deployment should, at most, require clicking a "deploy now" button.

Unfortunately, while we can certainly strive for that, we can't always achieve it. Bugs happen and issues arise that sometimes require some degree of manual intervention. Maybe a cache gets out of sync somehow and needs to be cleared. Perhaps a bug prevented some data being indexed in your ElasticSearch cluster, and you need to "manually" index it. Or maybe you want to test some backend functionality, without worrying about the UI.

If you know these tasks are going to be necessary, then you should absolutely try and run them automatically when they're going to be needed. For example, if you update your application to index more data in ElasticSearch, then you should automatically do that re-indexing when your application deploys.

We run these tasks as part of the "migrations" job I described in previous posts. Migrations don't just have to be database migrations!

If you don't know that the tasks are going to be necessary, then having a simple method to run the tasks is very useful. One option is to have an "admin" screen in your application somewhere that lets you simply and easily run the tasks.

There are pros and cons to this approach. On the plus side, it provides an easy mechanism for running the tasks, and uses the same authentication and authorization mechanisms built into your application. The downside is that you're exposing various potentially destructive operations via an endpoint, which may require more privileges than the rest of your application. There's also the maintenance overhead of exposing and wiring up those tasks in the UI.

An alternative approach is the classic "system administrator" approach: a command line tool that can run the administrative tasks. The problem with this in a Kubernetes setting is deciding where to run the tool. It likely needs access to the same resources as your production application, so unless you want severe headaches trying to duplicate configuration and access secrets from multiple places, you really need to run the tasks from inside the cluster.

Our solution: a long-running deployment of a CLI tool

In a previous post, I mentioned that I like to create a "CLI" application for each of my main applications. This tool is used to run database migrations, but it also allows you to run any other administrative commands you might need.

The overall solution we've settled on is to create a special "CLI exec host" pod in a deployment, as part of your application release. This pod contains our application's CLI tool for running various administration commands. The pod's job is just to sit there, doing nothing, until we need to run a command.

When we need to run a command, we exec into the container, and run the command.

Kubernetes allows you to open a shell in a running container by using exec (short for executing a command). If you have kubectl configured, you can do this from the command line using something like:

kubectl exec --stdin --tty test-app-cli-host -- /bin/bash

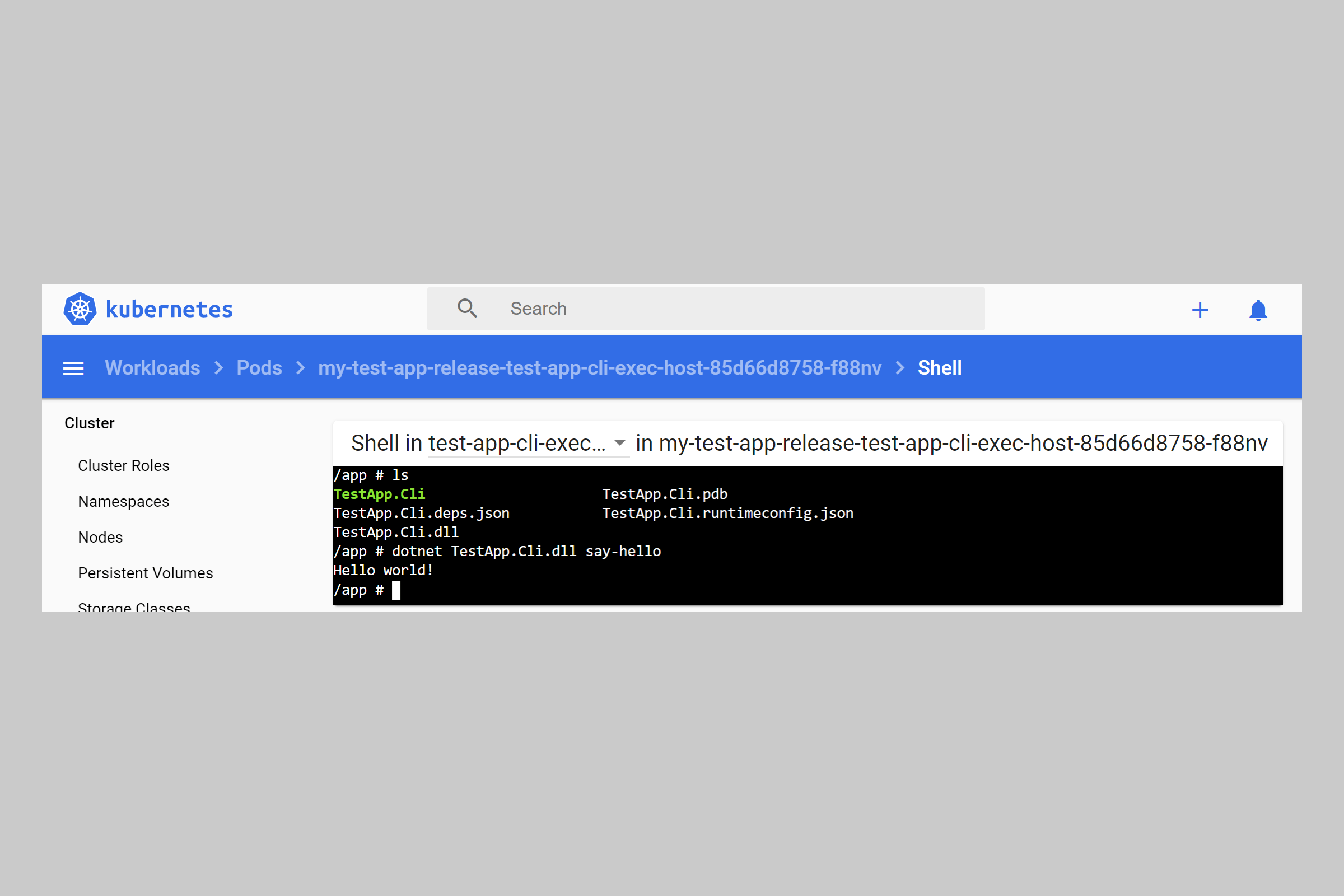

Personally, I prefer to exec into a container using the Kubernetes dashboard. You can exec into any running container by selecting the pod and clicking the exec symbol:

This gives you a command prompt with the container's shell (which may be the bash shell or the ash shell, for example). From here you can run any commands you like. In the example above I ran the ls command.

Be aware, if you exec into one of your "application" pods, then you could impact your running applications. Obviously that could be Bad™.

At this point you have a shell in a pod in your Kubernetes cluster, so you can run any administrative commands you need to. Obviously you need to be aware of the security implications here: depending on how locked down your cluster is, this may not be something you can or want to do, but it's worked well enough for us!

Creating the CLI exec-host container

We want to deploy the CLI tool inside the exec-host pod as part of our application's standard deployment, so we'll need a Docker container and a Helm chart for it. As in my previous posts, I'll assume that you have already created a .NET Core command-line tool for running commands. In this section I show the Dockerfile I use and the Helm chart for deploying it.

The tricky part in setting this up is that we want to have a container that does nothing, but isn't killed. We don't want Kubernetes to run our CLI tool; we want to do that ourselves when we exec into the container, so we can choose the right command. But the container has to run something, otherwise it will exit and we won't have anything to exec into. To achieve that, I use a simple shell script.

The keep_alive.sh script

The following script is based on a StackOverflow answer (shocker, I know). It looks a bit complicated, but this script essentially just sleeps for 86,400 seconds (1 day). The extra code ensures there's no delay when Kubernetes tries to kill the pod (for example, when we're upgrading a chart). See the StackOverflow answer for a more detailed explanation.

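    #!/bin/sh

    # Runs when the container receives SIGTERM
    die_func() {
        echo "Terminating"
        exit 1
    }
    trap die_func TERM

    echo "Sleeping..."
    # restarts once a day
    # Sleep in the background and wait on it, so the trap can run without delay
    sleep 86400 &
    wait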

We'll use this script to keep a pod alive in our cluster so that we can exec into it, while using very few resources (typically a couple of MB of memory and 0 CPU!).
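To see why the script sleeps in the background and then calls wait, here is a rough sketch you can run locally, with no cluster involved. It writes a shortened copy of the script to a temporary file (the 30-second sleep and the temp file names are my own choices for the demo), sends it SIGTERM, and measures how long it takes to stop. A shell only runs a trap once the foreground command finishes, but the wait builtin is interrupted straight away, which is what makes the shutdown prompt.

```shell
#!/bin/sh
# Demo only: a shortened copy of keep_alive.sh written to a temp file
demo="$(mktemp)"
cat > "$demo" <<'EOF'
#!/bin/sh
trap 'echo "Terminating"; exit 1' TERM
echo "Sleeping..."
sleep 30 &
wait
EOF

# Run it in the background, capturing its output in a file
sh "$demo" > "$demo.out" 2>&1 &
pid=$!
sleep 1   # give the script a moment to install its trap

# Send the same signal Kubernetes sends when it stops a pod
start=$(date +%s)
kill -TERM "$pid"
status=0
wait "$pid" || status=$?
elapsed=$(( $(date +%s) - start ))

output="$(cat "$demo.out")"
echo "exit status: $status, stopped after ${elapsed}s"
rm -f "$demo" "$demo.out"
```

If you change the demo script to call sleep 30 in the foreground instead, the trap should only fire once the sleep has finished, which is the delay the original script is avoiding.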

The CLI exec-host Dockerfile

For the most part, the Dockerfile for the CLI tool is a standard .NET Core application. The interesting part is the runtime container, so I've used a very basic builder Dockerfile that just does everything in one step.

Don't copy the builder part of this Dockerfile (everything before the ###). Instead, use an approach that uses layer caching.

    # Build standard .NET Core application
    FROM mcr.microsoft.com/dotnet/core/sdk:3.1 AS builder
    WORKDIR /app

    # WARNING: This is completely unoptimised!
    COPY . .

    # Publish the CLI project to the path /app/output/cli
    RUN dotnet publish ./src/TestApp.Cli -c Release -o /app/output/cli

    ###################

    # Runtime image
    FROM mcr.microsoft.com/dotnet/core/aspnet:3.1-alpine

    # Copy the background script that keeps the pod alive
    WORKDIR /background
    COPY ./keep_alive.sh ./keep_alive.sh

    # Ensure the file is executable
    RUN chmod +x /background/keep_alive.sh

    # Set the command that runs when the pod is started
    CMD "/background/keep_alive.sh"

    WORKDIR /app

    # Copy the CLI tool into this container
    COPY --from=builder ./app/output/cli .

This Dockerfile does a few things:

- Builds the CLI project in a completely unoptimised way.

- Uses the ASP.NET Core runtime image as the base deployment container. If your CLI tool doesn't need the ASP.NET Core runtime, you could use the base .NET Core runtime image instead.

- Copies the keep_alive.sh script from the previous section into the background folder.

- Sets the container CMD to run the keep_alive.sh script. When the container is run, the script will be executed.

- Changes the working directory to /app and copies the CLI tool into the container.

We'll add this Dockerfile to our build process, and tag it as andrewlock/my-test-cli-exec-host. Now we have a Docker image, we need to create a chart to deploy the tool with our main application.

Creating a chart for the cli-exec-host

The only thing we need for our exec-host Chart is a deployment.yaml to create a deployment. We don't need a service (other apps shouldn't be able to call the pod) and we don't need an ingress (we're not exposing any ports externally to the cluster). All we need to do is ensure that a pod is available if we need it.

The deployment.yaml shown below is based on the default template created when you call helm create test-app-cli-exec-host. We don't need any readiness/liveness probes, as we're just using the keep_alive.sh script to keep the pod running, so I removed that section. I added an additional section for injecting environment variables, as we want our CLI tool to have the same configuration as our other applications.

Don't worry about the details of this YAML too much. There's a lot of boilerplate in there and a lot of features we haven't touched on that will go unused unless you explicitly configure them. I only decided to show the whole chart for completeness.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ include "test-app-cli-exec-host.fullname" . }}
      labels:
        {{- include "test-app-cli-exec-host.labels" . | nindent 4 }}
    spec:
      replicas: 1
      selector:
        matchLabels:
          {{- include "test-app-cli-exec-host.selectorLabels" . | nindent 6 }}
      template:
        metadata:
          {{- with .Values.podAnnotations }}
          annotations:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          labels:
            {{- include "test-app-cli-exec-host.selectorLabels" . | nindent 8 }}
        spec:
          {{- with .Values.imagePullSecrets }}
          imagePullSecrets:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          serviceAccountName: {{ include "test-app-cli-exec-host.serviceAccountName" . }}
          securityContext:
            {{- toYaml .Values.podSecurityContext | nindent 8 }}
          containers:
            - name: {{ .Chart.Name }}
              securityContext:
                {{- toYaml .Values.securityContext | nindent 12 }}
              image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
              imagePullPolicy: {{ .Values.image.pullPolicy }}
              env:
              {{- $env := merge (.Values.env | default dict) (.Values.global.env | default dict) -}}
              {{ range $k, $v := $env }}
                - name: {{ $k | quote }}
                  value: {{ $v | quote }}
              {{- end }}
              resources:
                {{- toYaml .Values.resources | nindent 12 }}
          {{- with .Values.nodeSelector }}
          nodeSelector:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.affinity }}
          affinity:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.tolerations }}
          tolerations:
            {{- toYaml . | nindent 8 }}
          {{- end }}

We'll need to add a section to the top-level chart's values.yaml to define the Docker image to use, and optionally override any other settings:

    test-app-cli-exec-host:
      image:
        repository: andrewlock/my-test-cli-exec-host
        pullPolicy: IfNotPresent
        tag: ""
      serviceAccount:
        create: false
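The env section of the deployment template reads from two places: the sub-chart's own env values and the shared global.env values. Helm's merge function gives precedence to its first argument, so a key set under test-app-cli-exec-host.env should win over the same key under global.env. As a rough sketch, this is how those values might be supplied from the command line. The variable names ASPNETCORE_ENVIRONMENT and LOG_LEVEL are hypothetical examples, not settings from this chart, and the HELM variable is a dry-run switch I've added so the script only prints the command by default.

```shell
#!/bin/sh
# HELM defaults to a dry run that only prints the command.
# Set HELM=helm in the environment to run it for real.
HELM="${HELM:-echo helm}"

# Hypothetical settings, purely for illustration:
#   global.env.*                  shared by every sub-chart that reads .Values.global.env
#   test-app-cli-exec-host.env.*  only for the exec-host; wins if a key is set in both
preview="$($HELM upgrade --install my-test-app-release . \
    --namespace=local \
    --set global.env.ASPNETCORE_ENVIRONMENT=Staging \
    --set test-app-cli-exec-host.env.LOG_LEVEL=Debug)"
echo "$preview"
```

In practice you would add these flags to your full install command rather than running them on their own, because a later helm upgrade doesn't keep earlier --set values unless you pass --reuse-values.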

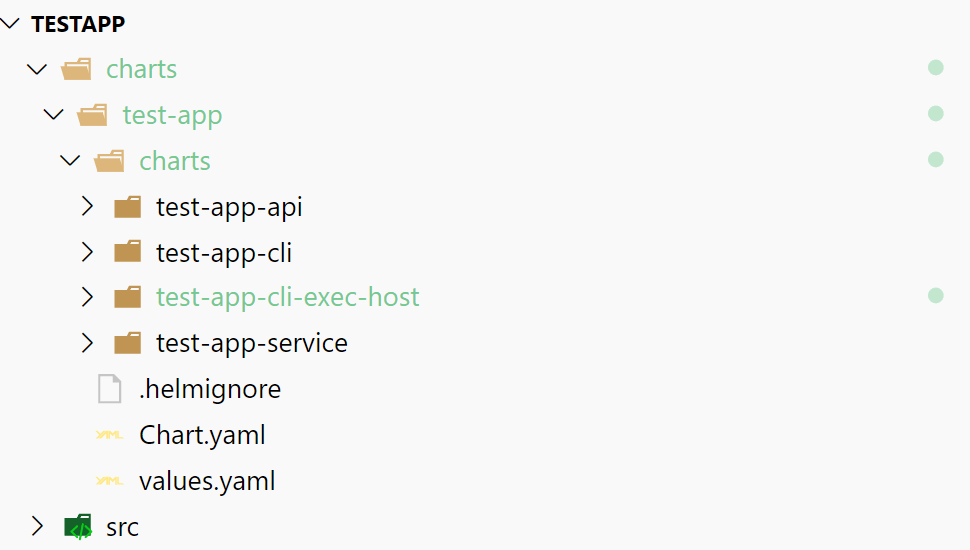

Our overall Helm chart has now grown to 4 sub-charts: The two "main" applications (the API and message handler service), the CLI job for running database migrations automatically, and the CLI exec-host chart for running ad-hoc commands:

All that's left to do is to take our exec-host chart for a spin!

Testing it out

We can install the chart using a command like the following:

    helm upgrade --install my-test-app-release . \
      --namespace=local \
      --set test-app-cli.image.tag="0.1.1" \
      --set test-app-cli-exec-host.image.tag="0.1.1" \
      --set test-app-api.image.tag="0.1.1" \
      --set test-app-service.image.tag="0.1.1" \
      --debug

After installing the chart, you should see the exec-host deployment and pod in your cluster, sat there happily doing nothing:
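If you'd rather check from the command line, something like the following sketch should do it. It assumes the chart still uses the default helpers generated by helm create, so the deployment is named after the release and the chart, and the pod carries the app.kubernetes.io/name label. Both are assumptions you should check against your own chart. The KUBECTL variable is a dry-run switch I've added so the script only prints the commands by default.

```shell
#!/bin/sh
# KUBECTL defaults to a dry run that only prints the commands.
# Set KUBECTL=kubectl in the environment to run them against your cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Assumed name: <release>-<chart>, as produced by the default fullname helper
DEPLOYMENT="my-test-app-release-test-app-cli-exec-host"

# Wait until the deployment reports that its pod is available
rollout="$($KUBECTL rollout status "deployment/$DEPLOYMENT" --namespace local)"
echo "$rollout"

# List the exec-host pod(s) using the label from the default chart helpers (assumption)
pods="$($KUBECTL get pods --namespace local --selector app.kubernetes.io/name=test-app-cli-exec-host)"
echo "$pods"
```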

We can now exec into the container. You could use kubectl if you're command-line-inclined, but I prefer to use the dashboard to click exec to get a shell. I'm normally only trying to run a command or two, so it's good enough!

As you can see in the image below, we have access to our CLI tool from here and can run our ad-hoc commands using, for example, dotnet TestApp.Cli.dll say-hello:

Ignore the error at the top of the shell. I think that's because Kubernetes tries to open a Bash shell specifically, but as this is an Alpine container, it uses the Ash shell instead.

And with that, we can now run ad-hoc commands in the context of our cluster whenever we need to. Obviously we don't want to make a habit of that, but having the option is always useful!
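For a single command you don't necessarily need an interactive shell at all: kubectl exec can run the command directly and return its output. The sketch below wraps that in a small helper. The run_cli function, the KUBECTL dry-run switch, and the deployment name are my own assumptions for illustration (the name assumes the default fullname helper from helm create). The command runs in the image's working directory, which the Dockerfile sets to /app, so the CLI dll can be referenced by its file name.

```shell
#!/bin/sh
# KUBECTL defaults to a dry run that only prints the command.
# Set KUBECTL=kubectl in the environment to run it against your cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Assumed name: <release>-<chart>, as produced by the default fullname helper
DEPLOYMENT="my-test-app-release-test-app-cli-exec-host"

# Hypothetical helper: run one CLI command in the exec-host pod and return
run_cli() {
    # "deploy/<name>" lets kubectl pick a pod belonging to the deployment
    $KUBECTL exec --namespace local "deploy/$DEPLOYMENT" -- dotnet TestApp.Cli.dll "$@"
}

preview="$(run_cli say-hello)"
echo "$preview"
```

This keeps the same caveats as opening a shell: anyone who can run it has whatever access the exec-host pod has.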

Summary

In this post I showed how to create a CLI exec-host to run ad-hoc commands in your Kubernetes cluster by creating a deployment of a pod that contains a CLI tool. The pod contains a script that keeps the container running while using very few resources. You can then exec into the pod, and run any necessary commands.