In the previous post in this series, I showed how I prefer to run database migrations when deploying ASP.NET Core applications to Kubernetes. One of the downsides of the approach I described, in which the migrations are run using a Job, is that it makes it difficult to know exactly when a deployment has succeeded or failed.

In this post I describe the problem and the approach I take to monitor the status of a release.

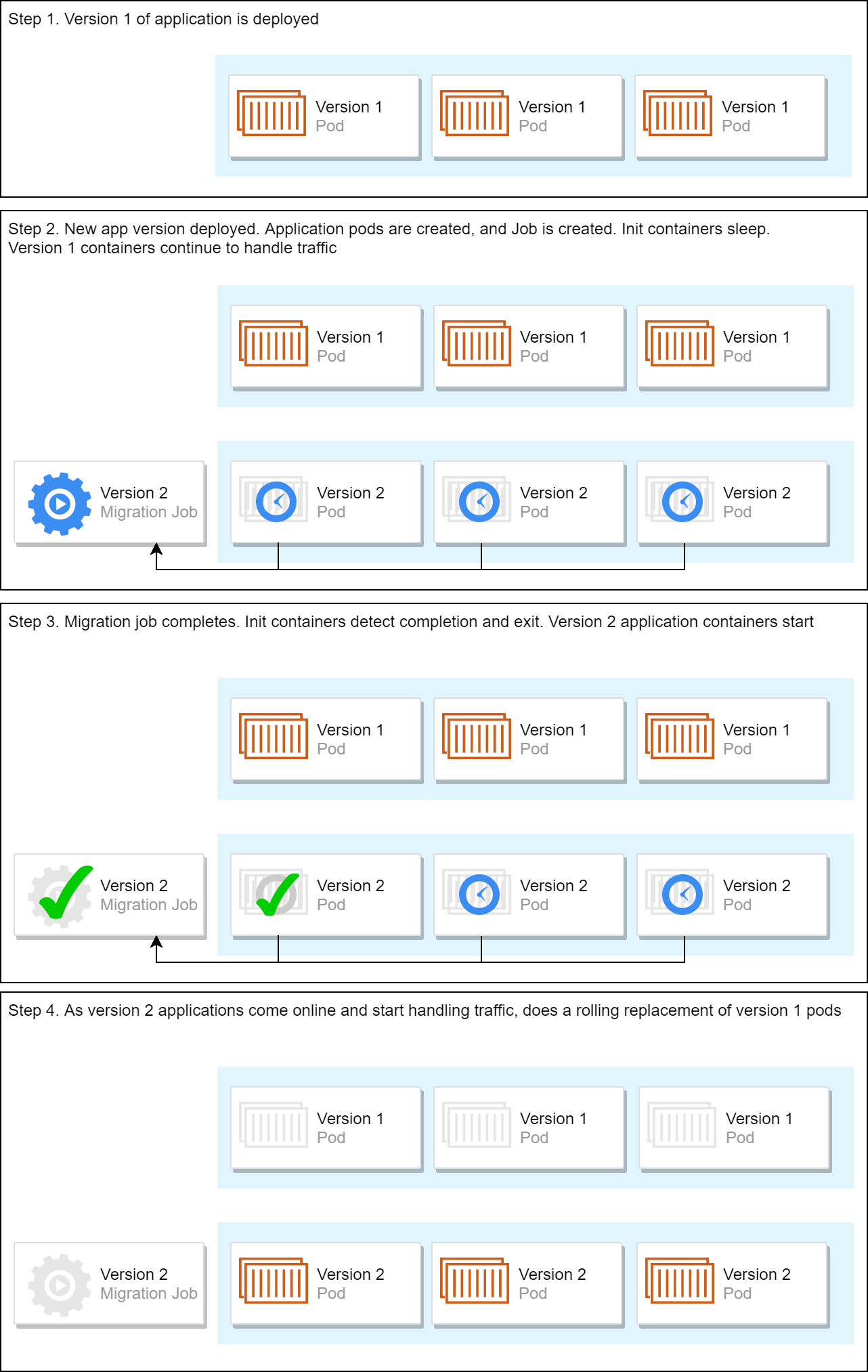

A quick recap: the database migration solution with Jobs and init containers

I've discussed our overall database migration approach in my last two posts, first in general, and then in more detail.

The overall approach can be summarised as:

- Execute the database migration using a Kubernetes job that is part of the application's Helm chart, and is installed with the rest of the application deployments.

- Add an init container to each "main" application pod, which monitors the status of the job, and only completes once the job has completed successfully.

The following chart shows the overall deployment process—for more details, see my previous post.

The only problem with this approach is working out when the deployment is "complete". What does that even mean in this case?

When is a Helm chart release complete?

With the solution outlined above and in my previous posts, you can install your Helm chart using a command something like:

helm upgrade --install my-test-app-release . \

--namespace=local \

--set test-app-cli.image.tag="1.0.0" \

--set test-app-api.image.tag="1.0.0" \

--set test-app-service.image.tag="1.0.0" \

--debug

The question is, how do you know if the release has succeeded? Did your database migrations complete successfully? Have your init containers unblocked your application pods, and allowed the new pods to start handling traffic?

Until all those steps have taken place, your release hasn't really finished yet. If the migrations fail, the init containers will never unblock, and your new application pods will never be started. You won't get downtime if you're using Kubernetes' rolling-update strategy (the default), but your new application won't be deployed either!

Initially, it looks like Helm should have you covered. The helm status command lets you view the status of a release. That's what we want, right? Unfortunately not. This is the output I get from running helm status my-test-app-release shortly after doing an update:

> helm status my-test-app-release

LAST DEPLOYED: Fri Oct 9 11:06:44 2020

NAMESPACE: local

STATUS: DEPLOYED

RESOURCES:

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-test-app-release-test-app-api ClusterIP 10.102.15.166 <none> 80/TCP 47d

my-test-app-release-test-app-service ClusterIP 10.104.28.71 <none> 80/TCP 47d

==> v1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

my-test-app-release-test-app-api 1 2 1 0 47d

my-test-app-release-test-app-service 1 2 1 0 47d

==> v1/Job

NAME DESIRED SUCCESSFUL AGE

my-test-app-release-test-app-cli-9 1 0 15s

==> v1beta1/Ingress

NAME HOSTS ADDRESS PORTS AGE

my-test-app-release-test-app-api chart-example.local 80 47d

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

my-test-app-release-test-app-api-7ddd69c8cd-2dwv5 1/1 Running 0 23m

my-test-app-release-test-app-service-5859745bbc-9f26j 1/1 Running 0 23m

my-test-app-release-test-app-api-68cc5d7ff-wkpmb 0/1 Init:0/1 0 15s

my-test-app-release-test-app-service-6fc875454b-b7ml9 0/1 Init:0/1 0 15s

my-test-app-release-test-app-cli-9-7gvqq 1/1 Running 0 15s

There's quite a lot we can glean from this view:

- Helm says the latest release was deployed on 9th October into the local namespace, and has a status of DEPLOYED.

- The Service, Deployment, and Ingress resources were all created 47 days ago. That's when I first installed them in the cluster.

- The latest Job, my-test-app-release-test-app-cli-9, has 0 pods in the Successful status. That's not surprising, as we can see the associated Pod, my-test-app-release-test-app-cli-9-7gvqq, is still running the migrations.

- One API pod and one service pod are running. These are actually the pods from the previous deployment, which are ensuring our zero-downtime release.

- The other API and service pods are sat in the Init:0/1 status. That means they are waiting for their init container to exit (each pod has 1 init container, and 0 init containers have completed).

The problem is that as far as Helm is concerned, the release has completed successfully. Helm doesn't know about our "delayed startup" approach. All Helm knows is it had resources to install, and they were installed. Helm is done.

To be fair, Helm does have approaches that I think would improve this, namely Chart Hooks and Chart Tests. Plus, theoretically, helm install --wait should work here, I think. Unfortunately I've always run into issues with all of these. It's quite possible I'm not using them correctly, so if you have a solution that works, let me know in the comments!

So where does that leave us? Unfortunately, the only approach I've found that works is a somewhat hacky bash script to poll the statuses…

Waiting for releases to finish by polling

So as you've seen, Helm sort of lies about the status of our release, as it says DEPLOYED before our migration pod has succeeded and our application pods are handling requests. However, helm status does give us a hint of how to determine when a release is out: watch the status of the pods.

The following script is based on this hefty post from a few years ago which attempts to achieve exactly what we're talking about—waiting for a Helm release to be complete.

I'll introduce the script below in pieces, and discuss what it's doing first. If you just want to see the final script you can jump to the end of the post.

As this series is focused on Linux containers in Kubernetes, the script below uses Bash. I don't have a PowerShell version of it, but hopefully you can piece one together from my descriptions if that's what you need!

Prerequisites

This script assumes that you have the following:

- The Kubernetes kubectl tool installed, configured to point to your Kubernetes cluster.

- The Helm command line tool installed.

- A chart to install. Typically this would be pushed to a chart repository, but as for the rest of this series, I'm assuming it's unpacked locally.

- Docker images for the apps in your helm chart, tagged with a version DOCKER_TAG, and pushed to a Docker repository accessible by your Kubernetes cluster.

As long as you have all those, you can use the following script.

Installing the Helm chart

The first part of the script sets some variables based on environment variables passed when invoking the script. It then installs the chart CHART into the namespace NAMESPACE, giving it the name RELEASE_NAME, and applying the provided additional arguments HELM_ARGS. You would call the script something like the following:

CHART="my-chart-repo/test-app" \

RELEASE_NAME="my-test-app-release" \

NAMESPACE="local" \

HELM_ARGS="--set test-app-cli.image.tag=1.0.0 \

--set test-app-api.image.tag=1.0.0 \

--set test-app-service.image.tag=1.0.0 \

" \

./deploy_and_wait.sh

This sets environment variables for the process and calls the ./deploy_and_wait.sh script.
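As a quick illustration of that calling convention, the sketch below shows that a variable written in front of a command is passed to that command only, and is not left behind in the calling shell. The DEMO_RELEASE_NAME variable and the inline child command are hypothetical stand-ins for the real inputs and for ./deploy_and_wait.sh.

```shell
#!/bin/bash
# Hypothetical stand-in for ./deploy_and_wait.sh: a child process that reads
# its configuration from the environment.
child='echo "release=${DEMO_RELEASE_NAME:-<unset>}"'

# The assignment prefix applies to this one command only.
with_prefix="$(DEMO_RELEASE_NAME="my-test-app-release" bash -c "$child")"

# Without the prefix the child sees nothing, because the calling shell
# never had the variable set.
without_prefix="$(bash -c "$child")"

echo "${with_prefix}"
echo "${without_prefix}"
```

This is why the example invocation can list several assignments, each ending in a line continuation, before the script path: they all belong to the same single command.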

The first part of the script checks that the required variables have been provided, fetches the details of your Kubernetes cluster, and then performs the Helm chart install:

#!/bin/bash

set -euo pipefail

# Required Variables:

[ -z "${CHART:-}" ] && echo "Need to set CHART" && exit 1;

[ -z "${RELEASE_NAME:-}" ] && echo "Need to set RELEASE_NAME" && exit 1;

[ -z "${NAMESPACE:-}" ] && echo "Need to set NAMESPACE" && exit 1;

# Set the helm context to the same as the kubectl context

KUBE_CONTEXT=$(kubectl config current-context)

# Install/upgrade the chart

helm upgrade --install \

$RELEASE_NAME \

$CHART \

--kube-context "${KUBE_CONTEXT}" \

--namespace="$NAMESPACE" \

${HELM_ARGS:-}
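Because the script enables set -u, referencing a variable that was never set aborts the script with an "unbound variable" error. One alternative way to express the required-variable checks is Bash's ${VAR:?message} expansion, which prints the message to stderr and exits a non-interactive shell when the variable is unset or empty. The following is a minimal sketch of that idea; the require_vars function name is my own invention for illustration, not part of the script in this post.

```shell
#!/bin/bash
# Hypothetical helper: fails with a message if any required variable is
# unset or empty. The ":" builtin does nothing; it just gives the
# expansions somewhere to run. This form is also safe under `set -u`.
require_vars() {
  : "${CHART:?Need to set CHART}"
  : "${RELEASE_NAME:?Need to set RELEASE_NAME}"
  : "${NAMESPACE:?Need to set NAMESPACE}"
}

# Example: run the check in a subshell so a failure does not end this shell
if (CHART="my-chart-repo/test-app"; RELEASE_NAME="my-test-app-release"; NAMESPACE="local"; require_vars); then
  echo "All required variables are set"
fi
```

In a real script you would call require_vars directly near the top, so that a missing variable stops the deployment before Helm is invoked.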

Now we've installed the chart, we need to watch for the release to complete (or fail).

Waiting for the release to be deployed

The first thing we need to do is wait for the release to be complete as far as Helm is concerned. If you remember back to earlier, Helm marked our release as DEPLOYED very quickly, but depending on your Helm chart (if you're using Helm hooks for something for example) and your cluster, that may take a little longer.

The next part of the script polls Helm for a list of releases using helm ls -q, and searches for our RELEASE_NAME using grep. We're just waiting for the release to appear in the list here, so that should happen very quickly. If it doesn't appear, something definitely went wrong, so we abandon the script with a non-zero exit code.

echo 'LOG: Watching for successful release...'

# Timeout after 6 repeats = 60 seconds

release_timeout=6

counter=0

# Loop while $counter < $release_timeout

while [ $counter -lt $release_timeout ]; do

# Fetch a list of release names

releases="$(helm ls -q --kube-context "${KUBE_CONTEXT}")"

# Check if $releases contains RELEASE_NAME

if ! echo "${releases}" | grep -qF "${RELEASE_NAME}"; then

echo "${releases}"

echo "LOG: ${RELEASE_NAME} not found. ${counter}/${release_timeout} checks completed; retrying."

# NOTE: The pre-increment usage. This makes the arithmetic expression

# always exit 0. The post-increment form exits non-zero when counter

# is zero. More information here: http://wiki.bash-hackers.org/syntax/arith_expr#arithmetic_expressions_and_return_codes

((++counter))

sleep 10

else

# Our release is there, we can stop checking

break

fi

done

if [ $counter -eq $release_timeout ]; then

echo "LOG: ${RELEASE_NAME} failed to appear." 1>&2

exit 1

fi
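The note about pre-increment in the loop above is easy to check for yourself. The sketch below runs each form in its own bash process with set -e enabled, so you can see which one lets the script continue. It assumes a reasonably recent Bash; the variable names are only for this demonstration.

```shell
#!/bin/bash
# Each case runs in its own bash process so that `set -e` aborting one case
# does not affect the other.

# Post-increment: the expression evaluates to the old value (0), so (( ))
# returns a non-zero status and `set -e` stops the child before the echo.
post="$(bash -c 'set -e; counter=0; ((counter++)); echo "reached, counter=$counter"' || true)"

# Pre-increment: the expression evaluates to the new value (1), so (( ))
# returns 0 and the child carries on to the echo.
pre="$(bash -c 'set -e; counter=0; ((++counter)); echo "reached, counter=$counter"')"

echo "post-increment output: '${post}'"
echo "pre-increment output:  '${pre}'"
```

The post-increment case produces no output at all, which is exactly the silent early exit the script avoids by using ((++counter)).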

Now we know that we have a release, we can check the status of the pods. There are two statuses we're looking for, Running and Succeeded:

- Succeeded means the pod exited successfully, which is what we want for the pods that make up our migration job.

- Running means the pod is currently running. This is the final status we want for our application pods, once the release is complete.

Our migration job pod will be Running initially, before the release is complete, but we know that our application pods won't start running until the job succeeds. Therefore we need to wait for all the release pods to be in either the Running or Succeeded state. Additionally, if we see Failed, we know the release failed.

In this part of the script we use kubectl get pods to fetch all the pods which have a label called app.kubernetes.io/instance set to the RELEASE_NAME. This label is added to your pods by Helm by default if you use the templates created by helm create as I described in a previous post.

The output of this command is assigned to release_pods, and will look something like:

my-test-app-release-test-app-api-68cc5d7ff-wkpmb Running

my-test-app-release-test-app-api-9dc77f7bc-7n596 Pending

my-test-app-release-test-app-cli-11-6rlsl Running

my-test-app-release-test-app-service-6fc875454b-b7ml9 Running

my-test-app-release-test-app-service-745b85d746-mgqps Pending

Note that this also includes running pods from the previous release. Ideally we'd filter those out, but I've never bothered working that out, as they don't cause any issues!

Once we have all the release pods, we check to see if any are Failed. If they are, we're done, and the release has failed.

Otherwise, we check to see if any of the pods are not Running or Succeeded. If, as in the previous example, some of the pods are still Pending (because the init containers are blocking) then we sleep for 10s. After that we check again.

If all the pods are in the Running or Succeeded state - we're done, the release was a success! If that never happens, eventually we time out, and exit in a failed state.

# Timeout after 20 mins (to leave time for migrations)

timeout=120

counter=0

# While $counter < $timeout

while [ $counter -lt $timeout ]; do

# Fetch all pods tagged with the release

release_pods="$(kubectl get pods \

-l "app.kubernetes.io/instance=${RELEASE_NAME}" \

-o 'custom-columns=NAME:.metadata.name,STATUS:.status.phase' \

-n "${NAMESPACE}" \

--context "${KUBE_CONTEXT}" \

--no-headers \

)"

# If we have any failures, then the release failed

if echo "${release_pods}" | grep -qE 'Failed'; then

echo "LOG: ${RELEASE_NAME} failed. Check the pod logs."

exit 1

fi

# Are any of the pods _not_ in the Running/Succeeded status?

if echo "${release_pods}" | grep -qvE 'Running|Succeeded'; then

echo "${release_pods}" | grep -vE 'Running|Succeeded'

echo "${RELEASE_NAME} pods not ready. ${counter}/${timeout} checks completed; retrying."

# NOTE: The pre-increment usage. This makes the arithmetic expression

# always exit 0. The post-increment form exits non-zero when counter

# is zero. More information here: http://wiki.bash-hackers.org/syntax/arith_expr#arithmetic_expressions_and_return_codes

((++counter))

sleep 10

else

#All succeeded, we're done!

echo "${release_pods}"

echo "LOG: All ${RELEASE_NAME} pods running. Done!"

exit 0

fi

done

# We timed out

echo "LOG: Release ${RELEASE_NAME} did not complete in time" 1>&2

exit 1
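If you want to convince yourself that the grep checks behave as described without touching a cluster, you can run them against canned output. The following sketch wraps the same two checks in a function and feeds it sample text; the classify_release_pods name and the shortened pod names are made up for this example.

```shell
#!/bin/bash
# Prints "failed", "pending", or "done" for a block of "NAME STATUS" lines,
# using the same grep checks as the polling loop.
classify_release_pods() {
  local release_pods="$1"
  if echo "${release_pods}" | grep -qE 'Failed'; then
    echo "failed"
  elif echo "${release_pods}" | grep -qvE 'Running|Succeeded'; then
    # At least one line is neither Running nor Succeeded
    echo "pending"
  else
    echo "done"
  fi
}

# Sample data in the same shape as the kubectl output (made up for this example)
in_progress="api-68cc5d7ff-wkpmb Running
api-9dc77f7bc-7n596 Pending
cli-11-6rlsl Running"

finished="api-9dc77f7bc-7n596 Running
cli-11-6rlsl Succeeded"

broken="api-9dc77f7bc-7n596 Pending
cli-11-6rlsl Failed"

classify_release_pods "${in_progress}"
classify_release_pods "${finished}"
classify_release_pods "${broken}"
```

Note that the Failed check runs first, so a release with one failed pod is reported as failed even if other pods are still pending.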

Obviously this isn't very elegant. We're polling repeatedly, trying to infer whether everything completed successfully. Eventually (20 mins in this case), we throw up our hands and say "it's clearly not going to happen!". I wish I had a better answer for you, but right now, it's the best I've got 🙂
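Both loops in the script follow the same shape: run a check, and if it fails, sleep and try again up to a maximum number of times. If the repetition bothers you, one option is to factor that shape into a small helper. This is only a sketch of the idea, not what the script in this post does; poll_until and succeeds_on_third_call are hypothetical names.

```shell
#!/bin/bash
# poll_until MAX_CHECKS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, sleeping DELAY_SECONDS between attempts.
# Returns 0 as soon as COMMAND succeeds, or 1 after MAX_CHECKS failed attempts.
poll_until() {
  local max_checks="$1" delay="$2"
  shift 2
  local counter=0
  while [ "${counter}" -lt "${max_checks}" ]; do
    if "$@"; then
      return 0
    fi
    counter=$((counter + 1))
    sleep "${delay}"
  done
  return 1
}

# Hypothetical check used only for this demonstration: succeeds on the third call.
attempts=0
succeeds_on_third_call() {
  attempts=$((attempts + 1))
  [ "${attempts}" -ge 3 ]
}

if poll_until 5 0 succeeds_on_third_call; then
  echo "check passed after ${attempts} attempts"
else
  echo "check did not pass in time" 1>&2
fi
```

In the real script, the check would be a function that runs the kubectl or helm query, and the delay would be 10 seconds rather than 0.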

Putting it all together

Let's put it all together now. Copy the following into a file called deploy_and_wait.sh and give execute permissions to the file using chmod +x ./deploy_and_wait.sh.

#!/bin/bash

set -euo pipefail

# Required Variables:

[ -z "${CHART:-}" ] && echo "Need to set CHART" && exit 1;

[ -z "${RELEASE_NAME:-}" ] && echo "Need to set RELEASE_NAME" && exit 1;

[ -z "${NAMESPACE:-}" ] && echo "Need to set NAMESPACE" && exit 1;

# Set the helm context to the same as the kubectl context

KUBE_CONTEXT=$(kubectl config current-context)

# Install/upgrade the chart

helm upgrade --install \

$RELEASE_NAME \

$CHART \

--kube-context "${KUBE_CONTEXT}" \

--namespace="$NAMESPACE" \

${HELM_ARGS:-}

echo 'LOG: Watching for successful release...'

# Timeout after 6 repeats = 60 seconds

release_timeout=6

counter=0

# Loop while $counter < $release_timeout

while [ $counter -lt $release_timeout ]; do

# Fetch a list of release names

releases="$(helm ls -q --kube-context "${KUBE_CONTEXT}")"

# Check if $releases contains RELEASE_NAME

if ! echo "${releases}" | grep -qF "${RELEASE_NAME}"; then

echo "${releases}"

echo "LOG: ${RELEASE_NAME} not found. ${counter}/${release_timeout} checks completed; retrying."

# NOTE: The pre-increment usage. This makes the arithmetic expression

# always exit 0. The post-increment form exits non-zero when counter

# is zero. More information here: http://wiki.bash-hackers.org/syntax/arith_expr#arithmetic_expressions_and_return_codes

((++counter))

sleep 10

else

# Our release is there, we can stop checking

break

fi

done

if [ $counter -eq $release_timeout ]; then

echo "LOG: ${RELEASE_NAME} failed to appear." 1>&2

exit 1

fi

# Timeout after 20 mins (to leave time for migrations)

timeout=120

counter=0

# While $counter < $timeout

while [ $counter -lt $timeout ]; do

# Fetch all pods tagged with the release

release_pods="$(kubectl get pods \

-l "app.kubernetes.io/instance=${RELEASE_NAME}" \

-o 'custom-columns=NAME:.metadata.name,STATUS:.status.phase' \

-n "${NAMESPACE}" \

--context "${KUBE_CONTEXT}" \

--no-headers \

)"

# If we have any failures, then the release failed

if echo "${release_pods}" | grep -qE 'Failed'; then

echo "LOG: ${RELEASE_NAME} failed. Check the pod logs."

exit 1

fi

# Are any of the pods _not_ in the Running/Succeeded status?

if echo "${release_pods}" | grep -qvE 'Running|Succeeded'; then

echo "${release_pods}" | grep -vE 'Running|Succeeded'

echo "${RELEASE_NAME} pods not ready. ${counter}/${timeout} checks completed; retrying."

# NOTE: The pre-increment usage. This makes the arithmetic expression

# always exit 0. The post-increment form exits non-zero when counter

# is zero. More information here: http://wiki.bash-hackers.org/syntax/arith_expr#arithmetic_expressions_and_return_codes

((++counter))

sleep 10

else

#All succeeded, we're done!

echo "${release_pods}"

echo "LOG: All ${RELEASE_NAME} pods running. Done!"

exit 0

fi

done

# We timed out

echo "LOG: Release ${RELEASE_NAME} did not complete in time" 1>&2

exit 1

Now, build your app, push the Docker images to your docker repository, ensure the chart is accessible, and run the deploy_and_wait.sh script using something similar to the following:

CHART="my-chart-repo/test-app" \

RELEASE_NAME="my-test-app-release" \

NAMESPACE="local" \

HELM_ARGS="--set test-app-cli.image.tag=1.0.0 \

--set test-app-api.image.tag=1.0.0 \

--set test-app-service.image.tag=1.0.0 \

" \

./deploy_and_wait.sh

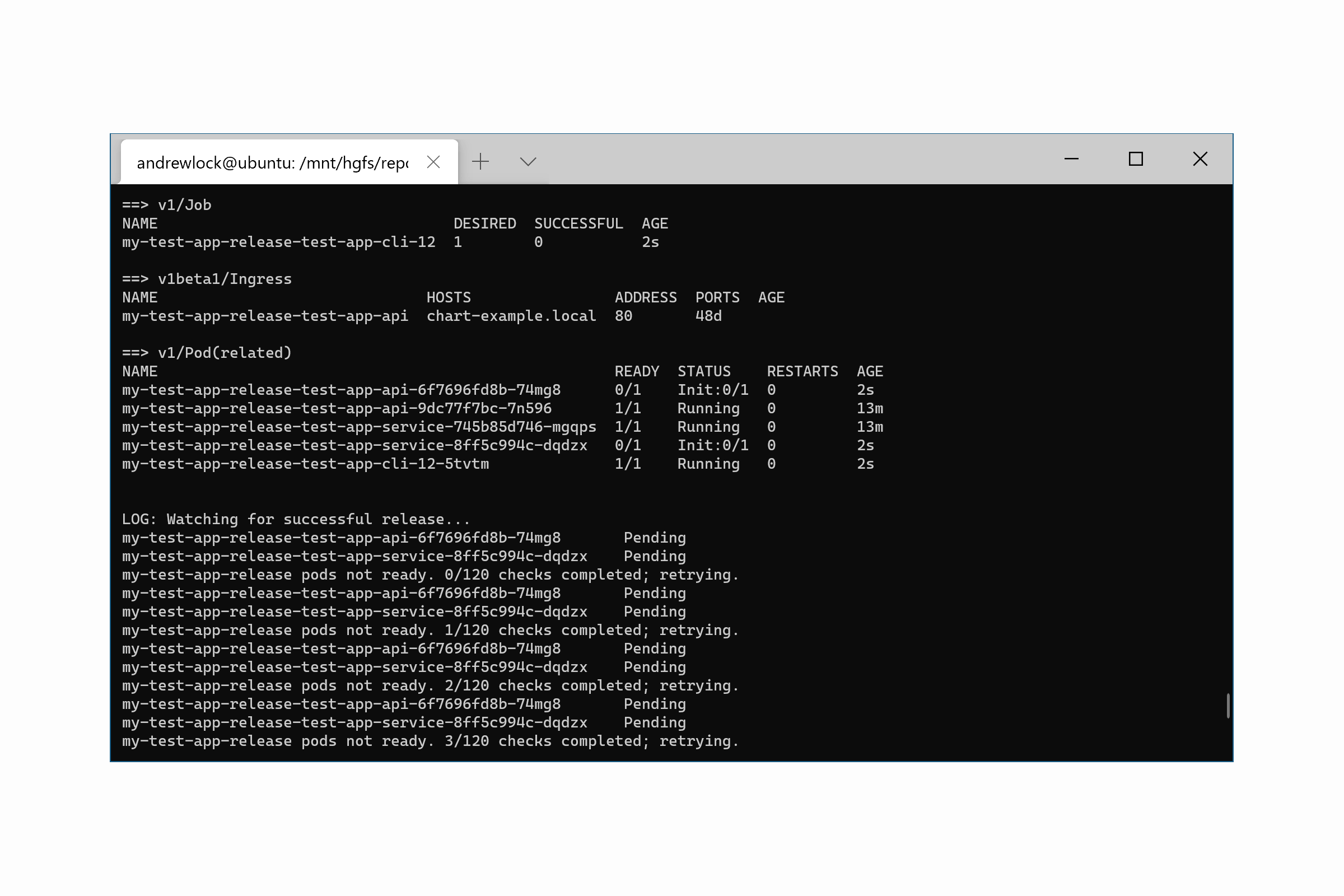

When you run this, you'll see something like the following. You can see Helm install the chart, and then the rest of our script kicks in, waiting for the CLI migrations to finish. When they do, and our application pods start, the release is complete, and the script exits.

Release "my-test-app-release" has been upgraded. Happy Helming!

LAST DEPLOYED: Sat Oct 10 11:53:37 2020

NAMESPACE: local

STATUS: DEPLOYED

RESOURCES:

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-test-app-release-test-app-api ClusterIP 10.102.15.166 <none> 80/TCP 48d

my-test-app-release-test-app-service ClusterIP 10.104.28.71 <none> 80/TCP 48d

==> v1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

my-test-app-release-test-app-api 1 2 1 1 48d

my-test-app-release-test-app-service 1 2 1 1 48d

==> v1/Job

NAME DESIRED SUCCESSFUL AGE

my-test-app-release-test-app-cli-12 1 0 2s

==> v1beta1/Ingress

NAME HOSTS ADDRESS PORTS AGE

my-test-app-release-test-app-api chart-example.local 80 48d

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

my-test-app-release-test-app-api-6f7696fd8b-74mg8 0/1 Init:0/1 0 2s

my-test-app-release-test-app-api-9dc77f7bc-7n596 1/1 Running 0 13m

my-test-app-release-test-app-service-745b85d746-mgqps 1/1 Running 0 13m

my-test-app-release-test-app-service-8ff5c994c-dqdzx 0/1 Init:0/1 0 2s

my-test-app-release-test-app-cli-12-5tvtm 1/1 Running 0 2s

LOG: Watching for successful release...

my-test-app-release-test-app-api-6f7696fd8b-74mg8 Pending

my-test-app-release-test-app-service-8ff5c994c-dqdzx Pending

my-test-app-release pods not ready. 0/120 checks completed; retrying.

my-test-app-release-test-app-api-6f7696fd8b-74mg8 Pending

my-test-app-release-test-app-service-8ff5c994c-dqdzx Pending

my-test-app-release pods not ready. 1/120 checks completed; retrying.

my-test-app-release-test-app-api-6f7696fd8b-74mg8 Pending

my-test-app-release-test-app-service-8ff5c994c-dqdzx Pending

my-test-app-release pods not ready. 2/120 checks completed; retrying.

my-test-app-release-test-app-api-6f7696fd8b-74mg8 Pending

my-test-app-release-test-app-service-8ff5c994c-dqdzx Pending

my-test-app-release pods not ready. 3/120 checks completed; retrying.

my-test-app-release-test-app-api-6f7696fd8b-74mg8 Running

my-test-app-release-test-app-api-9dc77f7bc-7n596 Running

my-test-app-release-test-app-cli-12-5tvtm Succeeded

my-test-app-release-test-app-service-745b85d746-mgqps Running

my-test-app-release-test-app-service-8ff5c994c-dqdzx Running

LOG: All my-test-app-release pods running. Done!

You can use the exit code of the script to trigger other processes, depending on how you choose to monitor your deployments. We use Octopus Deploy to trigger our deployments, which runs this script. If the release is installed successfully, the Octopus deployment succeeds; if not, it fails. What more could you want! 🙂
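If you're not using a tool that reacts to exit codes for you, a thin wrapper can do the same job. The sketch below is a minimal example of that pattern, assuming nothing beyond standard Bash; run_release is a hypothetical name, and true and false stand in for a successful and a failed run of the deploy script.

```shell
#!/bin/bash
# run_release COMMAND [ARGS...]
# Runs the deployment command and reports the outcome based on its exit code.
# Returns the same exit code so a calling CI/CD tool can react to it too.
run_release() {
  local status=0
  "$@" || status=$?
  if [ "${status}" -eq 0 ]; then
    echo "Release succeeded"
  else
    echo "Release failed with exit code ${status}" 1>&2
  fi
  return "${status}"
}

# Real usage would look something like:
#   run_release ./deploy_and_wait.sh
# Here `true` and `false` stand in for a successful and a failed deployment.
run_release true
run_release false || echo "Follow-up step: notify the team, roll back, etc."
```

Capturing the status with || keeps the wrapper working even when the calling script has set -e enabled.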

Octopus has built-in support for both Kubernetes and Helm, but they didn't when we first started this approach. Even so, there's something nice about deployments being completely portable bash scripts, rather than being tied to a specific vendor.

I'm not suggesting this is the best approach to deploying (I'm very interested in a GitOps approach such as Argo CD), but it's worked for us for some time, so I thought I'd share. Tooling has been getting better and better around Kubernetes the last few years, so I'm sure there's already a better approach out there! If you are using a different approach, let me know in the comments.

Summary

In this post I showed how to monitor a deployment that runs database migrations using Kubernetes jobs and init containers. The approach I show in this post uses a bash script to poll the state of the pods in the release, waiting for them all to move to the Succeeded or Running status.