In this post I describe how I used the azcopy command-line tool to back up some files to Azure blob storage. This is somewhat outside my comfort zone, so I'm mostly posting it in the hope that people will drop suggestions and advice in the comments for things I could improve! 😃

Backing up photos with Immich

A short while ago, I decided I needed to set up a proper backup solution for my girlfriend's and my photos (let's be honest, 99% of the photos are of our dog…😂). All the photos are already backed up to Google Photos, and we have various ad hoc backups of the files on our laptops (which are in turn backed up), but I wanted something more systematic. In short, I wanted a self-hosted version of Google Photos.

I really like Google Photos, but the potential for having our entire photo history deleted if Google took it upon themselves to shut down our accounts does worry me. Obviously it would have other wide-ranging impacts too, but the loss of the photos worries me more than anything else.

As luck would have it, the open source Immich project aims to be exactly that: it's a Google Photos clone that you can self-host! And while it's still in the "development" phase it's really, really good 😄 You don't have to take my word for it - they have a demo portal available to try on the web, or you can stand it up yourself.

I'm not going to describe how to set up Immich in this post as the instructions will likely go out of date. Instead, I suggest checking out the excellent docs. It's also important to check the release notes on GitHub for each release while it's still in the "development" phase.

Initially I thought I would set up a VM in Azure and host Immich there, with the intention of using some of my Microsoft MVP credits, but I didn't count on just how expensive that is. Even running a mediocre VM burned through my credits in less than a week 😅

In hindsight, I should have known using a VM wouldn't be cost-effective. One of the common complaints about "traditional" lift-and-shift migrations to the cloud that use VMs is that they're so expensive. To use the cloud efficiently you really have to take advantage of the compute/storage-as-a-service offerings.

Instead, I ordered a refurbed mini-PC, put a 1TB SSD in it, and stuck it in the basement. Cost-wise this is far more efficient, and obviously having a local machine has advantages. The big downside was that I really wanted an offsite backup for the photos. After exploring a few options, I decided an easy approach would be to back up the files to Azure blob storage.

Trying to understand Azure Blob Storage pricing

After being burned by the price of VMs I figured I would check the costs of blob storage ahead of time. And what-do-you-know, there's an Azure Blob Storage pricing document that covers exactly this 🎉

Unfortunately, as a relative newbie to the cloud-management side of things, I found this page virtually impenetrable. There are so many variables, with no indication of how to choose between the options and no explanation of what they do (and so whether I need them or not), that I quickly gave up.

As a side-rant, this is fundamentally my complaint about basically all "cloud" stuff. It's not just Azure; AWS is just as bad. Unless you're immersed in this stuff, it's an overwhelming sea of jargon and options😅

Either way, I was aware there were going to be three main sources of costs:

- Ingress costs. Uploading data to Azure blob storage has a cost associated with the number of write operations performed.

- Storage costs. You pay per GB for the data stored.

- Egress costs. Retrieving/downloading files from Azure blob storage has a cost per read operation, and a cost per GB downloaded (for some storage tiers).

I wasn't really sure exactly which blob storage options I needed, but I knew that:

- Most of the ingress costs were going to be "one time" costs. New photos will be added periodically, but they're only low-volume.

- I only had ~350GB of photos currently. That would increase over time, but only slowly.

- As this is a backup solution, theoretically I wouldn't need to download the data again other than in an emergency, so wouldn't need to worry about the egress costs.

So I decided to just give it a try, and see what happens. Worst case, it would turn out to be too expensive, I'd burn through my Azure credits, and I'd tear it down. Slightly annoying but nothing more than that.

The next question was how to do the backup. I knew I could write a small .NET app using the Azure SDK if need be, but a little googling suggested the azcopy utility would do exactly what I wanted!

Backing up files to Azure blob storage with azcopy

While looking into azcopy I ran across this example in the Microsoft docs: Synchronize with Azure Blob storage by using AzCopy. Mirroring a local directory to Azure blob storage is exactly what I was trying to do, so in the end it was easy to get started. Before we get to the tool itself, I'll describe what I did to set up Blob storage in Azure.

Creating a storage account for the backup

Azure has multiple different types of storage. You can have blob storage (which I'm using for backing up files in this case), table storage, queues, and various other things. These are grouped together into a storage account, so before we can send anything to blob storage, we need to create the storage account.

I chose the UI-clicky approach to create my storage account, given I don't really know what I'm doing (so the extra UI help is useful) and I don't need to automate it (as I'm only doing it once). I did the following to create the account:

- Navigate to Storage Accounts in the Azure Portal.

- Click Create to create a new storage account. I used the following settings on the Basic tab:

  - Choose a resource group for the account (or create a new one). The resource group is a "higher level" grouping of Azure resources, which you can use to logically understand which resources are related. In my case, I created a new resource group called immich-backup-rg.

  - Enter a name for the storage account. I used immichbackup (as you can only use letters and numbers, no special characters).

  - Choose a region. Choosing one which is close to you likely makes the most sense.

  - For performance I left it at standard as I almost certainly don't need the features and performance that premium provides (at a cost).

  - For redundancy I left the selection at geo-redundant storage (GRS) as that provides failover to a separate region. There are additional redundancy options that provide for failover to other zones too, but as this is only a backup scenario, that seemed unnecessary.

- In the Advanced tab I mostly stuck to the defaults. The only change I made was:

  - I changed the blob-storage Access-tier to be cool based on the descriptions (and the pricing differences):

    - Cool: Infrequently accessed data and backup scenarios.

    - Hot: Frequently accessed data and day-to-day usage scenarios.

  - I left everything else as-is. I considered enabling hierarchical namespace but I couldn't quite work out if I needed that or not, or what the implications were, so I left it disabled 🤷‍♂️😅

- In the Networking tab I left everything as the defaults. That means I left network access as Enable public access from all networks. I didn't want the hassle of fighting with cloud networking when getting started, but we'll revisit this again later!

- Finally, I clicked through to Review and Deploy the storage account, and waited for Azure to do its thing.
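For anyone who would rather script this than click through the portal, here is a minimal sketch of roughly the same setup using the Azure CLI (az). It is not what I actually ran. The resource group and account names are the ones from the steps above, but the uksouth region is a placeholder assumption, and the sketch assumes you have already run az login.

```shell
# Names taken from the portal steps above; LOCATION is an assumption, change it
RESOURCE_GROUP="immich-backup-rg"
STORAGE_ACCOUNT="immichbackup"
LOCATION="uksouth"

# Create the resource group, then a standard GRS account with the cool access tier
create_backup_account() {
  az group create --name "$RESOURCE_GROUP" --location "$LOCATION" &&
  az storage account create \
    --name "$STORAGE_ACCOUNT" \
    --resource-group "$RESOURCE_GROUP" \
    --location "$LOCATION" \
    --kind StorageV2 \
    --sku Standard_GRS \
    --access-tier Cool
}
```

Calling create_backup_account runs both commands in order and stops if the resource group cannot be created. Networking and the other Advanced settings are left at whatever the CLI defaults to, which may not match the portal defaults exactly.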

Once the storage account was created, it was time to create a container for the blob storage.

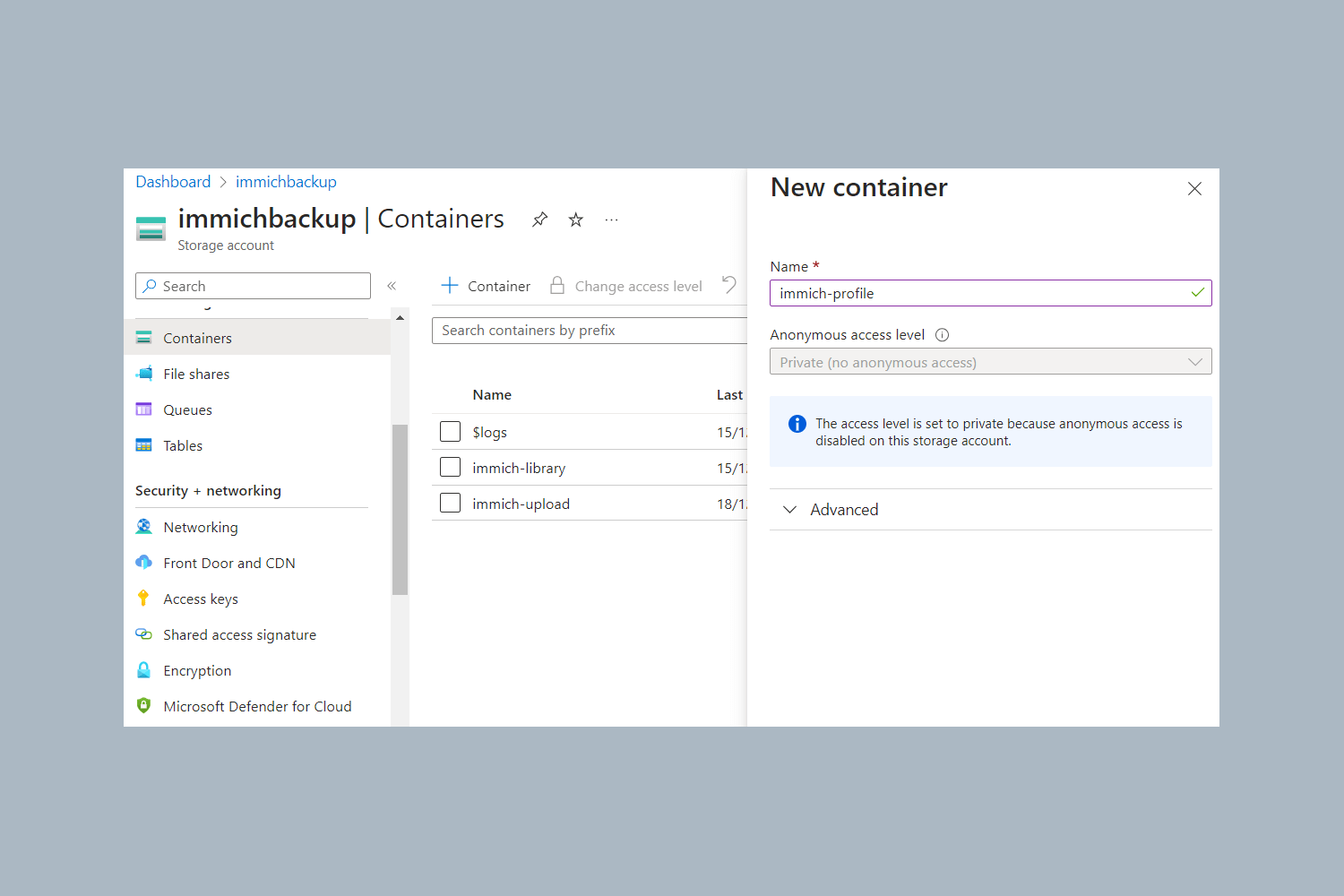

Creating Blob Storage containers

Azure Blob storage divides your storage into containers. For my Immich photo backup, there are (currently) three main folders that need to be backed up (in addition to the database):

- UPLOAD_LOCATION/library

- UPLOAD_LOCATION/upload

- UPLOAD_LOCATION/profile

These contain the original files uploaded to Immich, and so are the files we want to back up. Immich also generates additional content (such as thumbnails), but as these can be re-generated from the original content there's no need to back them up.

I chose to create separate containers for each of these folders. From your new storage account's page, navigate to Containers in the left menu, and click + Container to create a new container. This opens a panel to create a new container. Don't worry, this is much quicker than creating the storage account; I only provided a name and left everything else with the defaults.
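The same thing can be sketched with the Azure CLI. This is an untested-by-me alternative to the portal, and only immich-library is a name that appears later in this post; immich-upload and immich-profile are hypothetical names I have made up for the other two folders.

```shell
STORAGE_ACCOUNT="immichbackup"

# Create one container per Immich folder
# (immich-upload and immich-profile are assumed names)
create_backup_containers() {
  for name in immich-library immich-upload immich-profile; do
    az storage container create \
      --name "$name" \
      --account-name "$STORAGE_ACCOUNT" || return 1
  done
}
```

How az authenticates against the storage account depends on your setup (see its --auth-mode option); the sketch leaves that at the default.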

We now have everything we need to start uploading to Blob storage.

Downloading azcopy

The azcopy utility is a Go command-line utility, so you can just download and run it. Links to the latest releases are listed in the docs.

I set up my mini PC as an Ubuntu server, so all the commands shown in this post assume you're running on Linux.

I used the following small bash script to download the latest (at the time of writing) version of azcopy and extract it to the home directory:

cd ~

# Download latest version of azcopy, and un-tar the executable

# Link from https://learn.microsoft.com/en-us/azure/storage/common/storage-use-azcopy-v10#download-azcopy

curl -L 'https://aka.ms/downloadazcopy-v10-linux' | tar -zx -C ~/

# Move the binary from ~/azcopy_linux_amd64_10.22.0/azcopy to ~/azcopy

mv ~/azcopy_*/azcopy ~/

# Remove the old directory

rm -rf ~/azcopy_*

You can confirm it was downloaded correctly by running ~/azcopy --version:

$ ~/azcopy --version

azcopy version 10.22.0

Now we've downloaded the tool, we can finally use it to back up our files.

Logging in to azcopy

There are a whole variety of ways to authenticate with Azure using azcopy. The best approach is to use a Microsoft Entra ID account, but this is another place where I tried that, got a frustrating error, and decided to take the easy option instead 😬

You can find the instructions for using Microsoft Entra ID in the azcopy docs. Theoretically this is a lot easier (and obviously more secure) than the approach I show below, but I got more opaque errors and decided to take the short-cut approach instead. I'll probably come back and do this properly at a later date.

Instead of fighting with tenants and service accounts, I decided to use a shared access signature (SAS) URL. These basically have access tokens embedded in them, so they allow access to protected resources without using an account. Generally speaking, you should always favour using some sort of user/managed/service account, but SAS URLs are an easy (dirty) way of getting access, which frankly was all I wanted.

To generate an access token for your container, navigate to your storage account, click the … menu to the right of the storage container you need, and click Generate SAS. This opens up a side-panel:

- Change the permissions to include all the options. I'm not 100% sure which ones azcopy actually needs, because Add, Create, and Write all seem like the same thing to me 😅

- Change the expiry time if necessary - that will depend on how fast your network is and how many files you need to upload.

- Click Generate SAS token and URL

This generates a SAS token, and also provides the full URI for the blob storage container, which includes the token. Copy the Blob SAS URL, and save it to a variable, e.g.:

url='https://immichbackup.blob.core.windows.net/immich-library?sp=racwdl&st=2023-12-29T19:24:49Z&se=2023-12-30T03:24:49Z&spr=https&sv=2022-11-02&sr=c&sig=SOMESIGNATURE'
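Because the SAS token expires, it can be handy to check the expiry before kicking off a long upload. The following is a small sketch that pulls the se (signed expiry) parameter out of a SAS URL. The sas_expiry function name is my own invention, and it assumes the timestamp is not URL-encoded, as in the example above.

```shell
# Print the value of the se= (expiry) query parameter of a SAS URL
sas_expiry() {
  # Drop everything up to the first '?', split the query on '&', keep the se= value
  printf '%s\n' "${1#*\?}" | tr '&' '\n' | sed -n 's/^se=//p'
}

url='https://immichbackup.blob.core.windows.net/immich-library?sp=racwdl&st=2023-12-29T19:24:49Z&se=2023-12-30T03:24:49Z&spr=https&sv=2022-11-02&sr=c&sig=SOMESIGNATURE'
sas_expiry "$url"   # prints 2023-12-30T03:24:49Z
```

If the printed time is already in the past, or too soon for the upload to finish, generate a fresh SAS URL first.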

Sync files to blob storage using azcopy

We're finally at the point where we can sync some files with azcopy! The actual command to do so is remarkably simple. Assuming that:

- The data you want to back up is stored in /immich-data/content/library

- The SAS URI for the container is stored in the $url variable

then syncing all your data is as simple as running:

~/azcopy sync /immich-data/content/library "$url" --recursive

Note that you can also add the --dry-run flag to see what azcopy is going to copy before it does it.

And it's as easy as that. My upload speeds are pretty slow, so the initial upload took getting-on for 36 hours, but incrementally uploading any new photos only takes a few seconds!
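To make the preview-then-copy habit harder to skip, the two variants of the command can be wrapped in a pair of small helpers. This is just a sketch: the preview_sync and run_sync names are hypothetical, and AZCOPY defaults to the ~/azcopy location used earlier.

```shell
# Path to the azcopy binary downloaded earlier (override by setting AZCOPY)
AZCOPY="${AZCOPY:-$HOME/azcopy}"

# Show what would be transferred from local folder $1 to SAS URL $2, copying nothing
preview_sync() {
  "$AZCOPY" sync "$1" "$2" --recursive --dry-run
}

# Run the real sync from local folder $1 to SAS URL $2
run_sync() {
  "$AZCOPY" sync "$1" "$2" --recursive
}
```

For example, preview_sync /immich-data/content/library "$url" followed by run_sync /immich-data/content/library "$url" once the preview looks right. Quoting "$url" matters here, as the SAS URL contains characters like ? and & that the shell could otherwise interpret.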

Exploring the costs

I only set up this backup a couple of weeks ago, but I'm happy enough with how much it looks like this will cost long-term to back up my Immich photos. The initial upload cost approximately £9, and the storage is costing ~£0.20 per day, which works out to ~£6 a month for ~350GB of storage.

That's a lot more expensive than a dedicated backup service like Backblaze would cost, so this isn't necessarily the best backup option cost-wise, but it's good enough for me.
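For the curious, the arithmetic behind those numbers is easy to reproduce. This sketch uses only the figures quoted above and assumes a 30-day month; the per-GB figure is my own derived estimate, not a published Azure price.

```shell
# Figures from this post: ~£0.20 per day for ~350GB stored
daily_cost=0.20
stored_gb=350

# Monthly cost, assuming a 30-day month
monthly_cost=$(awk -v d="$daily_cost" 'BEGIN { printf "%.2f", d * 30 }')

# Effective cost per GB per month
per_gb_month=$(awk -v m="$monthly_cost" -v g="$stored_gb" 'BEGIN { printf "%.3f", m / g }')

echo "Monthly: £$monthly_cost, per GB per month: £$per_gb_month"
```

That works out to roughly £0.017 per GB per month, which is a handy number for comparing against other providers as the photo library grows.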

Restricting access via IP address

When I initially set up the storage account, I opted for public network access to avoid any potential issues, but I subsequently went back and added a restriction so that it can only be accessed from my home's public IP address.

You can get your home public IP address from a Linux shell using dig +short myip.opendns.com @resolver1.opendns.com, for example.

To add the restriction:

- Go to your Storage Account

- From the left menu choose Networking

- On the first tab, under Public network access, select Enabled from selected virtual networks and IP addresses

- Under Firewall, click Add your client IP address ('83.17.219.240') to add your home address IP as an exclusion. Make sure that this IP address is the same one returned by the dig command!

- Click Save.

After a short while the firewall is configured, and you should still be able to access your files using azcopy, but they'll be less exposed to the public internet.
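If your home IP address changes often, redoing this in the portal gets tedious. As a rough sketch (not something I've set up myself), the same restriction could be applied with the Azure CLI, reusing the dig lookup. The current_public_ip and restrict_to_ip function names are hypothetical, and it assumes you are logged in with az login.

```shell
RESOURCE_GROUP="immich-backup-rg"
STORAGE_ACCOUNT="immichbackup"

# Look up the public IP address of the current network
current_public_ip() {
  dig +short myip.opendns.com @resolver1.opendns.com
}

# Allow the IP address in $1, then deny all other public network access
restrict_to_ip() {
  az storage account network-rule add \
    --account-name "$STORAGE_ACCOUNT" \
    --resource-group "$RESOURCE_GROUP" \
    --ip-address "$1" &&
  az storage account update \
    --name "$STORAGE_ACCOUNT" \
    --resource-group "$RESOURCE_GROUP" \
    --default-action Deny
}
```

Running restrict_to_ip "$(current_public_ip)" adds the allow rule before switching the default action to Deny, so you shouldn't lock yourself out part-way through.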

What's next?

That's as far as I've got at the moment, but there are some obvious areas for improvement:

- Scripting the backup. Immich requires frequent manual updates at the moment, so I'm currently running the backup scripts whenever I update Immich, but longer-term it would make more sense to have the backup automatically run in the background.

- Authenticating with Entra. Scripting the backup would mean switching to Entra account authentication (as you don't really want to have very-long-lived SAS tokens floating around).

- Backing up the Immich database. It would be nice to include a backup of the Immich postgres database. I'm most concerned with backing up the photo data rather than Immich itself at the moment, but the more I use Immich, the more I will want this I think!
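As a starting point for the first item, here is a sketch of what a scripted backup of all three folders might look like. It is not something I'm running yet. The sync_folder and backup_all names are hypothetical, and it assumes the three folders live under /immich-data/content and that you pass in one SAS URL per container.

```shell
AZCOPY="${AZCOPY:-$HOME/azcopy}"
# Assumed to be the Immich UPLOAD_LOCATION
DATA_ROOT="${DATA_ROOT:-/immich-data/content}"

# Sync one Immich folder ($1) to the container behind the SAS URL ($2)
sync_folder() {
  if [ -z "$2" ]; then
    echo "No SAS URL supplied for $1" >&2
    return 1
  fi
  "$AZCOPY" sync "$DATA_ROOT/$1" "$2" --recursive
}

# $1..$3: SAS URLs for the library, upload and profile containers
backup_all() {
  sync_folder library "$1" &&
  sync_folder upload "$2" &&
  sync_folder profile "$3"
}
```

backup_all stops at the first failure and returns a non-zero status, so a scheduler such as cron can flag a failed run. It still relies on SAS URLs, which is exactly why the Entra authentication item matters before automating this for real.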

Summary

In this post I described how I backed up my Immich photos to an Azure storage account using the azcopy utility. It's probably not a best practice for anything, but it's what I did for now, and is something I can improve on in the future!