Clik here to view.

In this post I describe how we tracked down a hanging xUnit test in our CI build. To achieve that, we wanted to have xUnit log which tests were running. This proved much harder than we had planned, and ended with us creating a custom XunitTestFramework implementation. This post shares our pain with the world.

The problem: a hanging test in CI

In the Datadog APM tracer library we run a lot of tests. We obviously have lots of unit tests which run quickly and rarely have issues. But because of the nature of the APM tracer, where we hook deep into the CLR Profiler APIs, we run a lot of integration tests, in which we instrument sample applications and confirm their behaviour is as expected.

These tests are expensive to run, as each test requires starting a separate process, hooking up the tracer, and running expected behaviour (such as sending web requests, accessing a database, and so on). On top of that, as these are real applications, with all the concurrency and edge cases that goes along with that, sometimes, a test will hang.

The trouble is that sometimes a test hangs in CI. And by default, you won't know which one. xUnit doesn't list which test is running. And if you can't replicate the issue locally in an IDE, then how can we even know which test was hanging? 🤔

This seemed like it should be something simple to enable: a log message when a test starts, and another one when a test ends. That way we could track down the culprit that was hanging. It wasn't quite as simple as we hoped.

Creating a custom test framework in xUnit

xUnit takes a very opinionated view as a test framework. It provides the minimal features required for a framework, and leaves the rest up to you. There are lots of ways to extend and plug in to the framework, but the framework doesn't give you a huge amount out of the box.

My colleague Kevin Gosse is the one to thank/blame for this code, which can be found on GitHub

After much hunting we (Kevin) established that the only way to log when a test starts and finishes is to write a custom test framework for xUnit.

In this section, I'll talk through all the layers required to achieve this, but as a quick test, I created a new project using the .NET CLI by running:

dotnet new xunit -n XunitCustomFrameworkTests

dotnet new sln

dotnet sln add ./XunitCustomFramework.csproj

I then added a simple test:

using Xunit;

namespace XunitCustomFrameworkTests;

public class CalculatorTests

{

[Fact]

public void ItWorks()

{

Assert.True(true);

}

}

Now we have a test project, lets set about logging when a method is starting and finishing

Creating the custom TestFramework

The TestFramework in xUnit is responsible for discovering and executing all the tests in your application. The default implementation is XunitTestFramework, but you can create your own test framework and "register" it with xUnit by adding an [assembly:Xunit.TestFramework] attribute.

The following creates our CustomTestFramework by deriving from the default XunitTestFramework.

using Xunit.Abstractions;

using Xunit.Sdk;

namespace XunitCustomFrameworkTests;

public class CustomTestFramework : XunitTestFramework

{

public CustomTestFramework(IMessageSink messageSink)

: base(messageSink)

{

}

}

The IMessageSink is an important interface that we will use to write messages to the console outside the context of a test. We'll use it to log when a test starts, and when it stops.

If you want to write messages to test output from inside a test, you should use the

ITestOutputHelperinterface, and inject it into your test class constructors.

Xunit won't use the CustomTestFramework automatically, you need to add the [TestFramework] attribute to the assembly. For example:

[assembly:Xunit.TestFramework("XunitCustomFrameworkTests.CustomTestFramework", "XunitCustomFrameworkTests")]

Note that you must place assembly attributes outside namespace declarations. With C#10's new namespace declarations, it's easy to get them the wrong way around!

Adding a diagnostic message

With the CustomTestFramework in place, lets add a message to the constructor, to confirm that we're using the type as expected:

public CustomTestFramework(IMessageSink messageSink)

: base(messageSink)

{

messageSink.OnMessage(new DiagnosticMessage("Using CustomTestFramework"));

}

To write a message, you must create a DiagnosticMessage, and pass it to the IMessageSink.OnMessage method. We can test it out by running dotnet test:

> dotnet test --no-build --nologo --no-restore

Test run for C:\repos\blog-examples\XunitCustomFramework\bin\Debug\net6.0\XunitCustomFramework.dll (.NETCoreApp,Version=v6.0)

Starting test execution, please wait...

A total of 1 test files matched the specified pattern.

Passed! - Failed: 0, Passed: 1, Skipped: 0, Total: 11, Duration: 11 ms - XunitCustomFramework.dll (net6.0)

Hmmm, there's no diagnostic messages there, which indicates the CustomTestFramework isn't being called. That's because diagnostic messages have to be enabled in xUnit's configuration.

Enabling diagnostic messages

The suggested approach to configuring xUnit is to use an xunit.runner.json file that is copied to your build output. Create the file xunit.runner.json in the root of your test project and add the following:

{

"$schema": "https://xunit.net/schema/current/xunit.runner.schema.json",

"diagnosticMessages": true

}

The $schema key enables IntelliSense for most editors in the file, and we've also enabled diagnostic messages. You should make sure the json file is set to be copied to your build output, so make sure you have the following <ItemGroup> in your csproj file:

<ItemGroup>

<None Update="xunit.runner.json" CopyToOutputDirectory="PreserveNewest" />

</ItemGroup>

Now if we run dotnet test we can see the diagnostic message

> dotnet test

Test run for C:\repos\blog-examples\XunitCustomFramework\bin\Debug\net6.0\XunitCustomFramework.dll (.NETCoreApp,Version=v6.0)

Starting test execution, please wait...

A total of 1 test files matched the specified pattern.

[xUnit.net 00:00:00.43] XunitCustomFramework: Using CustomTestFramework

Passed! - Failed: 0, Passed: 1, Skipped: 0, Total: 11, Duration: 11 ms - XunitCustomFramework.dll (net6.0)

OK, now we know we're hooking in correctly, we can go about achieving our goal: logging when each test starts and finishes.

Creating a custom TestMethodRunner

The TestFramework is the "top-level" piece of the equation that we need to replace, but I'm going to jump to the lowest level piece now, the custom TestMethodRunner. The RunTestCaseAsync method on this class is invoked once for every test case (a [Fact], or a single case of a [Theory] test), so it provides a good place for us to log the behaviour.

For simplicity we can derive from the default implementation, XunitTestMethodRunner, and override the RunTestCaseAsync() method to add the behaviour we need. The following annotated code shows how to do this:

class CustomTestMethodRunner : XunitTestMethodRunner

{

private readonly IMessageSink _diagnosticMessageSink;

// We need to pass all the injected values into the base constructor

public CustomTestMethodRunner(ITestMethod testMethod, IReflectionTypeInfo @class, IReflectionMethodInfo method, IEnumerable<IXunitTestCase> testCases, IMessageSink diagnosticMessageSink, IMessageBus messageBus, ExceptionAggregator aggregator, CancellationTokenSource cancellationTokenSource, object[] constructorArguments)

: base(testMethod, @class, method, testCases, diagnosticMessageSink, messageBus, aggregator, cancellationTokenSource, constructorArguments)

{

_diagnosticMessageSink = diagnosticMessageSink;

}

protected override async Task<RunSummary> RunTestCaseAsync(IXunitTestCase testCase)

{

// Create a text representation of the test parameters (for theory tests)

var parameters = string.Empty;

if (testCase.TestMethodArguments != null)

{

parameters = string.Join(", ", testCase.TestMethodArguments.Select(a => a?.ToString() ?? "null"));

}

// Build the full name of the test (class + method + parameters)

var test = $"{TestMethod.TestClass.Class.Name}.{TestMethod.Method.Name}({parameters})";

// Write a log to the output that we're starting the test

_diagnosticMessageSink.OnMessage(new DiagnosticMessage($"STARTED: {test}"));

try

{

// Execute the test and get the result

var result = await base.RunTestCaseAsync(testCase);

// Work out the final status of the test

var status = result.Failed > 0

? "FAILURE"

: (result.Skipped > 0 ? "SKIPPED" : "SUCCESS");

// Write the result of the test to the output

_diagnosticMessageSink.OnMessage(new DiagnosticMessage($"{status}: {test} ({result.Time}s)"));

return result;

}

catch (Exception ex)

{

// Something went wrong trying to execute the test

_diagnosticMessageSink.OnMessage(new DiagnosticMessage($"ERROR: {test} ({ex.Message})"));

throw;

}

}

}

Hopefully the code in the snippet above is fairly easy to understand. The main question now is how to plug this into the CustomTestFramework previously. This is where things get a bit messy.

Creating the test framework class hierarchy

Currently we have implemented:

CustomTestFramework(an implementation ofTestFramework)CustomTestMethodRunner(an implementation ofTestMethodRunner<IXunitTestCase>)

But to connect them, so that our CustomTestFramework uses the CustomTestMethodRunner, we also need to create the following types:

CustomExecutor(an implementation ofTestFrameworkExecutor<IXunitTestCase>)CustomAssemblyRunner(an implementation ofTestAssemblyRunner<IXunitTestCase>)CustomTestCollectionRunner(an implementation ofTestCollectionRunner<IXunitTestCase>)CustomTestClassRunner(an implementation ofTestClassRunner<IXunitTestCase>)

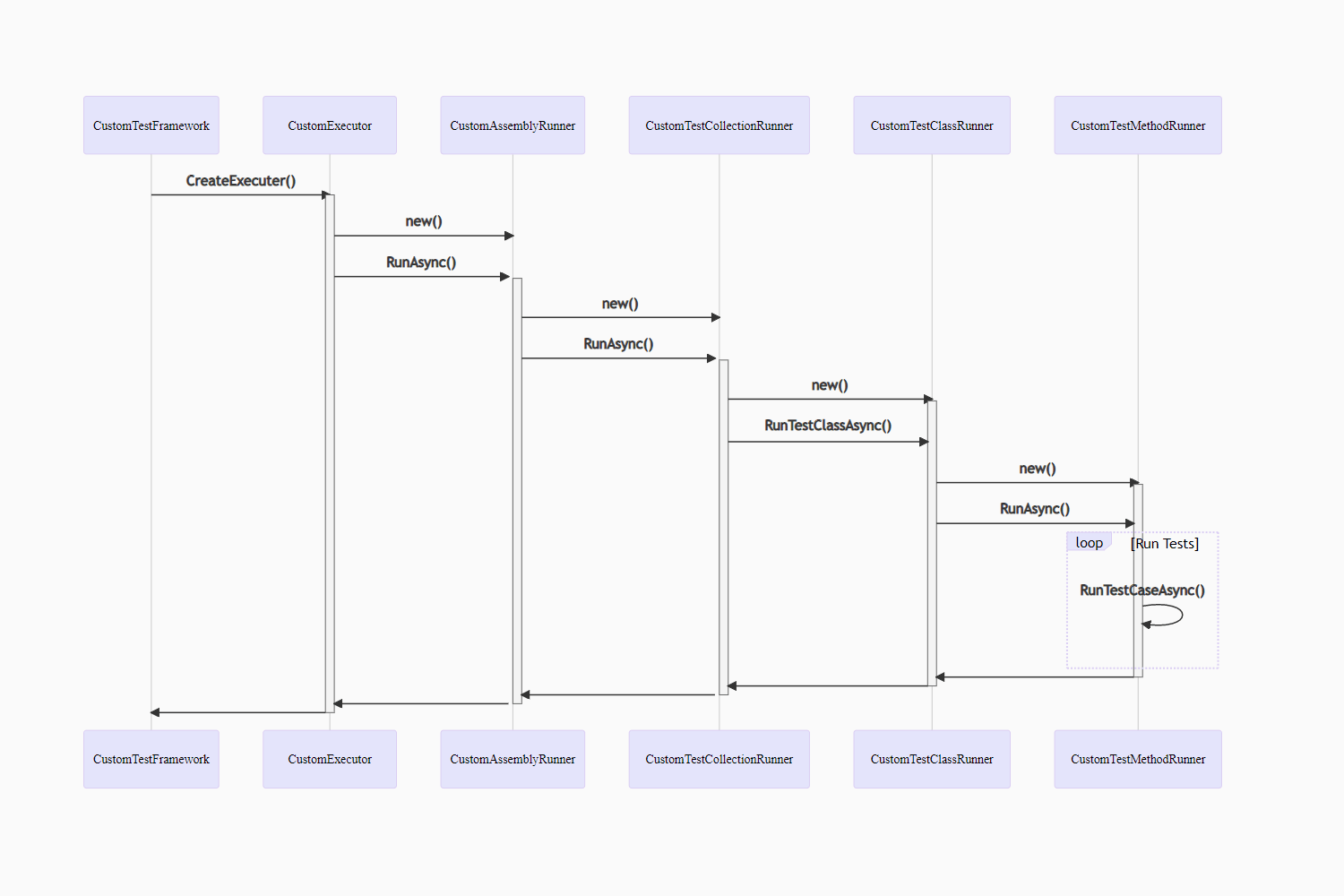

The CustomTestFramework creates the CustomExecutor, which creates the CustomAsssemblyRunner, which creates the CustomTestCollectionRunner, which creates a CustomTestClassRunner which finally create the CustomTestMethodRunner! The sequence diagram looks something like this:

Image may be NSFW.

Clik here to view.

This is all boiler plate, there's nothing custom there, but due to the way the class structures are designed we have to implement all of them. The final complete hierarchy should look something like this:

public class CustomTestFramework : XunitTestFramework

{

public CustomTestFramework(IMessageSink messageSink)

: base(messageSink)

{

messageSink.OnMessage(new DiagnosticMessage("Using CustomTestFramework"));

}

protected override ITestFrameworkExecutor CreateExecutor(AssemblyName assemblyName)

=> new CustomExecutor(assemblyName, SourceInformationProvider, DiagnosticMessageSink);

private class CustomExecutor : XunitTestFrameworkExecutor

{

public CustomExecutor(AssemblyName assemblyName, ISourceInformationProvider sourceInformationProvider, IMessageSink diagnosticMessageSink)

: base(assemblyName, sourceInformationProvider, diagnosticMessageSink)

{

}

protected override async void RunTestCases(IEnumerable<IXunitTestCase> testCases, IMessageSink executionMessageSink, ITestFrameworkExecutionOptions executionOptions)

{

using var assemblyRunner = new CustomAssemblyRunner(TestAssembly, testCases, DiagnosticMessageSink, executionMessageSink, executionOptions);

await assemblyRunner.RunAsync();

}

}

private class CustomAssemblyRunner : XunitTestAssemblyRunner

{

public CustomAssemblyRunner(ITestAssembly testAssembly, IEnumerable<IXunitTestCase> testCases, IMessageSink diagnosticMessageSink, IMessageSink executionMessageSink, ITestFrameworkExecutionOptions executionOptions)

: base(testAssembly, testCases, diagnosticMessageSink, executionMessageSink, executionOptions)

{

}

protected override Task<RunSummary> RunTestCollectionAsync(IMessageBus messageBus, ITestCollection testCollection, IEnumerable<IXunitTestCase> testCases, CancellationTokenSource cancellationTokenSource)

=> new CustomTestCollectionRunner(testCollection, testCases, DiagnosticMessageSink, messageBus, TestCaseOrderer, new ExceptionAggregator(Aggregator), cancellationTokenSource).RunAsync();

}

private class CustomTestCollectionRunner : XunitTestCollectionRunner

{

public CustomTestCollectionRunner(ITestCollection testCollection, IEnumerable<IXunitTestCase> testCases, IMessageSink diagnosticMessageSink, IMessageBus messageBus, ITestCaseOrderer testCaseOrderer, ExceptionAggregator aggregator, CancellationTokenSource cancellationTokenSource)

: base(testCollection, testCases, diagnosticMessageSink, messageBus, testCaseOrderer, aggregator, cancellationTokenSource)

{

}

protected override Task<RunSummary> RunTestClassAsync(ITestClass testClass, IReflectionTypeInfo @class, IEnumerable<IXunitTestCase> testCases)

=> new CustomTestClassRunner(testClass, @class, testCases, DiagnosticMessageSink, MessageBus, TestCaseOrderer, new ExceptionAggregator(Aggregator), CancellationTokenSource, CollectionFixtureMappings)

.RunAsync();

}

private class CustomTestClassRunner : XunitTestClassRunner

{

public CustomTestClassRunner(ITestClass testClass, IReflectionTypeInfo @class, IEnumerable<IXunitTestCase> testCases, IMessageSink diagnosticMessageSink, IMessageBus messageBus, ITestCaseOrderer testCaseOrderer, ExceptionAggregator aggregator, CancellationTokenSource cancellationTokenSource, IDictionary<Type, object> collectionFixtureMappings)

: base(testClass, @class, testCases, diagnosticMessageSink, messageBus, testCaseOrderer, aggregator, cancellationTokenSource, collectionFixtureMappings)

{

}

protected override Task<RunSummary> RunTestMethodAsync(ITestMethod testMethod, IReflectionMethodInfo method, IEnumerable<IXunitTestCase> testCases, object[] constructorArguments)

=> new CustomTestMethodRunner(testMethod, this.Class, method, testCases, this.DiagnosticMessageSink, this.MessageBus, new ExceptionAggregator(this.Aggregator), this.CancellationTokenSource, constructorArguments)

.RunAsync();

}

private class CustomTestMethodRunner : XunitTestMethodRunner

{

private readonly IMessageSink _diagnosticMessageSink;

public CustomTestMethodRunner(ITestMethod testMethod, IReflectionTypeInfo @class, IReflectionMethodInfo method, IEnumerable<IXunitTestCase> testCases, IMessageSink diagnosticMessageSink, IMessageBus messageBus, ExceptionAggregator aggregator, CancellationTokenSource cancellationTokenSource, object[] constructorArguments)

: base(testMethod, @class, method, testCases, diagnosticMessageSink, messageBus, aggregator, cancellationTokenSource, constructorArguments)

{

_diagnosticMessageSink = diagnosticMessageSink;

}

protected override async Task<RunSummary> RunTestCaseAsync(IXunitTestCase testCase)

{

var parameters = string.Empty;

if (testCase.TestMethodArguments != null)

{

parameters = string.Join(", ", testCase.TestMethodArguments.Select(a => a?.ToString() ?? "null"));

}

var test = $"{TestMethod.TestClass.Class.Name}.{TestMethod.Method.Name}({parameters})";

_diagnosticMessageSink.OnMessage(new DiagnosticMessage($"STARTED: {test}"));

try

{

var result = await base.RunTestCaseAsync(testCase);

var status = result.Failed > 0

? "FAILURE"

: (result.Skipped > 0 ? "SKIPPED" : "SUCCESS");

_diagnosticMessageSink.OnMessage(new DiagnosticMessage($"{status}: {test} ({result.Time}s)"));

return result;

}

catch (Exception ex)

{

_diagnosticMessageSink.OnMessage(new DiagnosticMessage($"ERROR: {test} ({ex.Message})"));

throw;

}

}

}

}

With the hierarchy complete, if we now run dotnet test, we can see each test executing and completing:

> dotnet test

Test run for C:\repos\blog-examples\XunitCustomFramework\bin\Debug\net6.0\XunitCustomFramework.dll (.NETCoreApp,Version=v6.0)

Starting test execution, please wait...

A total of 1 test files matched the specified pattern.

[xUnit.net 00:00:00.43] XunitCustomFramework: Using CustomTestFramework

[xUnit.net 00:00:01.11] XunitCustomFramework: STARTED: XunitCustomFrameworkTests.CalculatorTests.ItWorks()

[xUnit.net 00:00:01.13] XunitCustomFramework: SUCCESS: XunitCustomFramework.CalculatorTests.ItWorks() (0.0075955s)

Passed! - Failed: 0, Passed: 1, Skipped: 0, Total: 11, Duration: 11 ms - XunitCustomFramework.dll (net6.0)

With the custom test framework in place, we can now see which test has started and not finished when something hangs in CI.

Making it easier to identify hanging tests

Using the custom test framework to track currently executing tests is undoubtedly useful, but it still requires you to parse the output and try and spot which of the (potentially thousands) of tests doesn't have a matching end log.

We can improve on this somewhat by starting a timer for each test, and logging an explicit warning if the tests lasts for two long.

For example, we can update the CustomTestMethodRunner.RunTestCaseAsync() method to start a timer just before calling base.RunTestCaseAsync():

var deadlineMinutes = 2;

using var timer = new Timer(

_ => _diagnosticMessageSink.OnMessage(new DiagnosticMessage($"WARNING: {test} has been running for more than {deadlineMinutes} minutes")),

null,

TimeSpan.FromMinutes(deadlineMinutes),

Timeout.InfiniteTimeSpan);

If the test runs longer than the deadline (2 minutes in the example above), a warning is logged to the output. For example, if we create an intentionally long running test:

public class CalculatorTests

{

[Fact]

public void VerySlowTest()

{

Thread.Sleep(TimeSpan.FromMinutes(3));

}

}

Then when we run dotnet test, we'll see a warning logged to the output:

> dotnet test

Test run for C:\repos\blog-examples\XunitCustomFramework\bin\Debug\net6.0\XunitCustomFramework.dll (.NETCoreApp,Version=v6.0)

Starting test execution, please wait...

A total of 1 test files matched the specified pattern.

[xUnit.net 00:00:00.43] XunitCustomFramework: Using CustomTestFramework

[xUnit.net 00:00:00.73] XunitCustomFramework: STARTED: XunitCustomFramework.CalculatorTests.VerySlowTest()

[xUnit.net 00:02:00.74] XunitCustomFramework: WARNING: XunitCustomFramework.CalculatorTests.VerySlowTest() has been running for more than 2 minutes

Now it's much easier to spot which of the tests has hung in CI without having to parse through a long list of started and completed tests.

Summary

In this post, I described how to create a custom xUnit TestFramework. This lets you insert "hooks" into the test running process, so you can keep track of which tests are running and which have completed. To help further, we can track when tests are running for longer than some threshold by starting a timer for each test, and logging a warning. The whole process is rather more work than I'd like, but hopefully this will help if you need to do something similar!